Hello my friend,

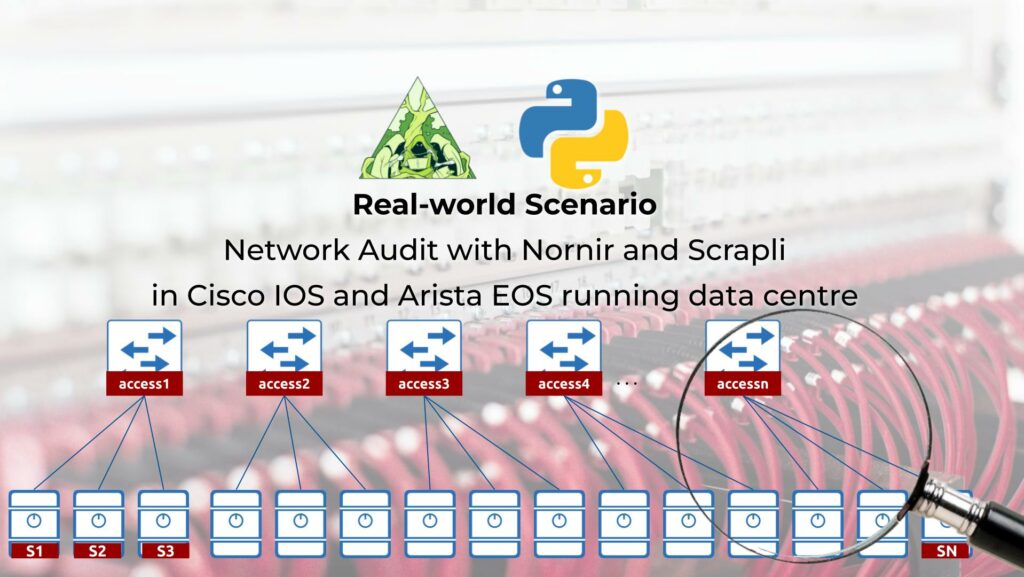

Today we are going to discuss a real-life experience: how network automation helped us save a lot of time and significantly improve the quality of an audit of a medium-sized data centre. You will learn about the problem the audit was meant to solve and how Python, leveraging Nornir and Scrapli, solved it.


Can Automation Help with Audits?

We, humans, are incredible creatures. We can create. We can write songs and compose music; we can invent new drugs and find new materials. We can develop new software and tools. However, to do that, we need free time and a mind that isn't worried about anything else. That's why we rely on tools that can perform routine, concentration-heavy tasks at least as well as we humans can, and probably even better. An audit is one such task, and in the IT world it definitely can and should be automated. Python provides a huge variety of tools for network and IT audits, covering all stages: planning the inventory for the audit, collecting data, processing it, and building reports.

And we are keen to teach you how to do that!

We offer the following training programs:

- Zero-to-Hero Network Automation Training

- High-scale automation with Nornir

- Ansible Automation Orchestration with Ansible Tower / AWX

During these trainings you will learn the following topics:

- Success and failure strategies to build the automation tools.

- Principles of software development and tools for it.

- Data encoding (free-text, XML, JSON, YAML, Protobuf)

- Model-driven network automation with YANG, NETCONF, RESTCONF, gNMI.

- Full configuration templating with Jinja2 based on the source of truth (NetBox).

- Best programming languages (Python, Bash) for developing automation, configuration management tools (Ansible) and automation frameworks (Nornir).

- Network automation infrastructure (Linux, Linux networking, KVM, Docker).

- Orchestration of automation workflows with AWX and its integration with NetBox, GitHub, as well as custom execution environments for better scalability.

Moreover, we put all the mentioned technologies in the context of real use cases, which our team has solved and is solving in various projects in service provider, enterprise and data centre networks and systems across Europe and the USA. That gives you the opportunity to ask questions to understand the solutions in depth and to discuss your own projects. On top of that, each technology comes with online demos and labs to master your skills thoroughly. Such a mixture creates a unique learning environment, which all students value so much. Join us and unleash your potential.

Brief Description

The audit of any production environment is a challenging task. Why so? Well, if your environment is well documented and you keep track of all changes, there is no need for an audit. However, if an audit is needed, it means that the documentation is inaccurate (or simply missing), and you need to collect the information from the real devices, analyse it and map it to what you already know. As such, we can split the whole audit process into a few steps:

- Define a scope. First of all, before starting the audit, you need to know what exactly you need to solve. Is it finding outdated software? Is it finding particular hosts? Is it analysing the configuration in general, or that of a specific protocol or service?

- Prepare the inventory for the audit. In other words, you need to collate a list of the devices you need to pull information from.

- Make sure devices are reachable. This step means ensuring that you have accurate credentials and permissions to collect the information from network devices. In addition, you validate that the devices are reachable over the network, meaning all VPN and firewall questions are solved.

- Audit itself. Finally, you collect the required information. Depending on the scope of the audit, it can be only the configuration, only operational data (or a subset of it), or both.

- Analysis and report. Once the information is collected, you analyse it against the originally defined scope. When the analysis is complete, the final audit report is generated and the follow-up steps can be taken.

These audit steps are fairly generic and aren't specific to networks. In the following example, you will see how we approached them and how Python helped us do the job well.

Preparation for the Audit

Step #1. Where Are My Hosts, Man?

The scope of our audit was to find all the servers connected to the data centre network and map their hostnames, IP and MAC addresses, and the access switch interfaces they are connected to. Our input information is:

- The names of all servers in the data centre, but without their IP addresses or any way to connect to them.

- The names, SW versions, management IP addresses and credentials of all network switches in the data centre.

There are some further specifics of the environment we need to deal with:

- For security reasons, neither LLDP nor CDP runs between servers and switches. As such, we cannot rely on this data to find the mapping.

- The SW versions of the network devices are fairly old and cannot be upgraded, so we cannot use any advanced programmable interfaces, such as NETCONF or gNMI.

- The descriptions of the access switch interfaces towards the connected servers are reasonably accurate, so we could rely on them to identify the servers.

Enrol in our network automation trainings to learn about NETCONF, gNMI, and other programmable interfaces for next-generation network automation.

As mentioned, we are auditing a legacy data centre. Here is a sample topology of its network:

[Figure: sample topology of the legacy data centre network]

Step #2. What Is the Plan?

Once the audit scope was clear, we started thinking about which pieces of information we needed to accomplish it:

- We need to collect the IP addresses of all devices connected to the network. This can be done by polling the ARP tables of the core switches, which terminate IP connectivity for all servers. If all the servers are up, we should be able to see the IP and MAC addresses of all hosts; if not, some information will be missing.

- We need to collect the MAC addresses of all devices connected to the access ports of the access switches (i.e., the content of the MAC address table). The good thing is that in our case the setup is fairly standard, so we can easily identify which interfaces are access ports and which are core-facing uplinks.

- Finally, we need to collect the descriptions of all interfaces on the access switches to be able to map each IP address to its MAC address, the access switch hostname, and the description of the interface.

In addition, we decided to also collect VLAN information to map each IP address to its VLAN and increase the level of detail in our report.
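Conceptually, the plan is a chain of three lookups: IP to MAC (ARP table), MAC to switch, interface and VLAN (MAC address table), and switch plus interface to description. The minimal sketch below shows that join on already-parsed data, assuming plain dictionaries as the intermediate format; the function name and the sample values are hypothetical and not taken from the audited network.

```python
def join_tables(arp: dict, mac_table: dict, descriptions: dict) -> dict:
    """Join IP -> MAC -> (switch, interface, VLAN) -> interface description.

    arp:          {ip: mac}                              (from core ARP tables)
    mac_table:    {mac: (switch, interface, vlan)}       (from access MAC tables)
    descriptions: {(switch, interface): description}     (from access switches)
    """
    result = {}
    for ip, mac in arp.items():
        # Start with an "unknown location" entry so unmatched IPs stay visible
        entry = {"mac": mac, "switch": None, "interface": None, "vlan": None, "server": None}
        location = mac_table.get(mac)
        if location is not None:
            switch, interface, vlan = location
            entry.update(switch=switch, interface=interface, vlan=vlan,
                         server=descriptions.get((switch, interface)))
        result[ip] = entry
    return result


if __name__ == "__main__":
    # Hypothetical sample data for illustration only
    arp = {"192.168.1.11": "ac1f.6b21.ed22", "192.168.1.99": "0000.1111.2222"}
    macs = {"ac1f.6b21.ed22": ("access1", "Gi1/17", 100)}
    descr = {("access1", "Gi1/17"): "HYPERVISOR-1"}
    print(join_tables(arp, macs, descr))
```

IP addresses whose MAC address never appears on an access port keep None in the location fields instead of being dropped, so gaps in the data remain visible in the report.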

Step #3. How Will We Approach IT?

We decided to leverage what we teach our students: we used Python to create a tool that collects and analyses the required data for us. Namely, we chose the Nornir automation framework, as it allows offloading the heavy lifting of threaded interaction with devices to purpose-built software.

Nornir itself, though, doesn’t have any built-in modules to interact with network devices, so we had a variety of options to deal with legacy devices:

- Netmiko

- NAPALM

- Scrapli

First of all, we tried NAPALM. In theory, NAPALM is very good at collecting normalised data for basic data sets. It should be able to collect all the data we need:

- ARP tables from core switches

- MAC address tables for access switches

- Interfaces description from access switches

So we did a quick test with Nornir and NAPALM, but it turned out that it was not able to retrieve the ARP table from the core switches: although they run Arista EOS, the returned ARP table was completely empty. As such, we decided to use Scrapli, as it supports the network operating systems of both vendors in our network: Arista EOS and Cisco IOS.

Developing Python Tool for an Audit

Step #1. What Are My Switches?

We were provided with the inventory in the form of a wiki page listing the IP addresses and software versions. As such, there was no need to build an integration with NetBox or any other IPAM system, which allowed us to use the SimpleInventory plugin for Nornir and create the list of hosts manually. Here is a sample:

```yaml
---
access1:
  hostname: 10.0.255.11
  groups:
    - access
access2:
  hostname: 10.0.255.12
  groups:
    - access
access3:
  hostname: 10.0.255.13
  groups:
    - access
# SOME OUTPUT IS TRUNCATED FOR BREVITY
core1:
  hostname: 10.0.255.253
  groups:
    - core
...
```

As you can see, we have split our hosts into two groups to simplify mapping them to network operating systems:

```yaml
---
core:
  platform: eos
  data:
    role: core
access:
  platform: ios
  data:
    role: access
...
```

Finally, we created a file with defaults, which contains the credentials as well as the Scrapli configuration details:

```yaml
---
username: test
password: test
## Add scrapli details
connection_options:
  scrapli:
    port: 22
    extras:
      ssh_config_file: True
      auth_strict_key: False
...
```

Overall, our Nornir configuration file looked as follows:

```yaml
---
inventory:
  plugin: SimpleInventory
  options:
    host_file: "./inventory/hosts.yaml"
    group_file: "./inventory/groups.yaml"
    defaults_file: "./inventory/defaults.yaml"
logging:
  enabled: True
runner:
  plugin: threaded
  options:
    num_workers: 20
...
```

Step #2. The Core Audit Tool

Our core consideration was to reuse as much of the existing codebase as possible. Therefore, we used the nornir_scrapli plugin and its send_command task to simplify data collection. The core code to collect data is as simple as this:

```python
# Modules
from nornir import InitNornir
from nornir_scrapli.tasks import send_command

# Variables
config_file = "./config.yaml"

# Body
if __name__ == "__main__":
    ## Initiate Nornir
    nr = InitNornir(config_file=config_file)

    ## Collect ARP from core
    nr_core = nr.filter(role="core")
    r1 = nr_core.run(task=send_command, command="show ip arp")

    ## Collect MAC from access
    nr_access = nr.filter(role="access")
    r2 = nr_access.run(task=send_command, command="show mac address-table")

    ## Collect Interface description
    r3 = nr_access.run(task=send_command, command="show interface description")
```

Enrol in our Network Automation with Nornir training to master Python automation.

The Python code snippet above is self-explanatory, but we will still provide a bit more detail:

- We create the Nornir object nr using InitNornir and our configuration file.

- Then we create a filtered child object containing only the core devices and, using the send_command task from the nornir_scrapli module, collect the output of their ARP tables.

- Afterwards, we create a filtered child object with the access devices and, using the same approach, collect the output of their MAC address tables and interface descriptions.

The collected data needs to be post-processed and analysed. If we think abstractly, the data from all three tables has the same format:

- The data is presented as a semi-formatted table.

- There is a key field in each of the tables (IP address for ARP tables, MAC address for MAC address table, and interface name for description table).

- There are some important fields, which we need to extract information from.

As such, we decided to create a helper function that restructures the output of such a table into a Python dictionary:

```python
# bin/helper_functions.py
import re


def _normalise_data(raw_input, unique_field: int, mapping: dict,
                    filter: list, add_host: bool = False) -> dict:
    """
    This helper function converts semi-formatted text into a structured
    dictionary by choosing a key field and other important fields for nested data.
    """
    result = {}

    # raw_input is a Nornir AggregatedResult: {hostname: MultiResult}
    for hostname, entry_1_level in raw_input.items():
        # str(Result) returns the raw CLI output collected by send_command
        for entry_2_level in str(entry_1_level[0]).splitlines():
            list_3_level = entry_2_level.split()

            try:
                # Skip duplicates and filtered values (headers, broadcast MAC, etc.)
                if list_3_level[unique_field] not in result and list_3_level[unique_field] not in filter:
                    # Ignore entries learned on port-channels (core-facing uplinks)
                    if "interface" not in mapping or not re.match(r'.*Po.*', list_3_level[mapping["interface"]]):
                        key_field = list_3_level[unique_field] if not add_host else f"{hostname},{list_3_level[unique_field]}"
                        result.update({key_field: {}})

                        for key_name, key_index in mapping.items():
                            value = f"{hostname},{list_3_level[key_index]}" if key_name == "interface" else list_3_level[key_index]
                            result[key_field].update({key_name: value})

            # Blank or short lines don't contain the requested columns
            except IndexError:
                pass

    return result
```

The key inputs for this function are:

- raw_input – the AggregatedResult object returned by Nornir's run().

- unique_field – index of the column used as the key for building the dictionary.

- mapping – dictionary containing the names of the keys and their column positions, which are put as key/value pairs into the newly created dictionary.

- filter – list of values we don't want to include in the final dictionary (e.g., table headers).

- add_host – whether to prepend the hostname to the key (needed to map interfaces in MAC tables to descriptions and switches).

This helper function is used by two other functions, which build the ARP table and then perform the full mapping:

```python
def get_unique_hosts(arp_table) -> dict:
    """
    This helper function performs conversion of the ARP table into a dictionary.
    """
    return _normalise_data(raw_input=arp_table,
                           unique_field=0,
                           mapping={"mac": 2},
                           filter=["Address"])


def match_ip_mac_port_description(arp_table: dict, mac_table, interfaces_table) -> dict:
    """
    This helper function merges the MAC address tables, the interface
    description tables, and the collected ARP table into a single dictionary.
    """
    result = arp_table

    normalised_mac_table = _normalise_data(raw_input=mac_table,
                                           unique_field=1,
                                           mapping={"vlan": 0, "interface": -1},
                                           filter=["Entries", "mac", "EOF", "ffff.ffff.ffff"])

    normalised_interface_table = _normalise_data(raw_input=interfaces_table,
                                                 unique_field=0,
                                                 mapping={"description": -1},
                                                 filter=["Interface"],
                                                 add_host=True)

    for ip_entry, ip_var in arp_table.items():
        to = {}

        if ip_var["mac"] in normalised_mac_table:
            # "access1,GigabitEthernet1/17" -> ["access1", "GigabitEthernet1/17"]
            pre_normalised_interface = normalised_mac_table[ip_var["mac"]]["interface"].split(",")
            # Build the short name used in the description table, e.g. "access1,Gi1/17"
            normalised_interface_name = pre_normalised_interface[0] + "," + pre_normalised_interface[1][0:2] + re.sub(r'^\D+(\d?/?\d+)$', r'\1', pre_normalised_interface[1])

            if normalised_interface_name in normalised_interface_table and not re.match(r'.*Po.*', pre_normalised_interface[1]):
                to = {
                    "switch": pre_normalised_interface[0],
                    "interface": pre_normalised_interface[1],
                    "vlan": int(normalised_mac_table[ip_var["mac"]]["vlan"]),
                    "server": normalised_interface_table[normalised_interface_name]["description"]
                }

        # No match on an access port: keep the IP, but leave the location empty
        if not to:
            to = {
                "switch": None,
                "interface": None,
                "vlan": None,
                "server": None
            }

        result[ip_entry].update(to)

    return result
```

The first function, get_unique_hosts(), is straightforward and just produces a dictionary out of the ARP table, whereas the second one, match_ip_mac_port_description(), maps all the data we need for our audit. Both rely on the aforementioned _normalise_data() helper function.
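The least obvious step in match_ip_mac_port_description() is the interface-name normalisation: the MAC address table and the description table may spell the same port differently (for example, GigabitEthernet1/17 vs Gi1/17), so the code shortens the name to a two-letter prefix plus the numeric part before the lookup. The regular expression used there, ^\D+(\d?/?\d+)$, handles two-level names such as 1/17 but not three-level names such as 1/0/17, which are common on stackable switches. The sketch below is a slightly more general variant of our own, not the code used in the audit; the function name shorten_interface is hypothetical.

```python
import re

# Two-letter prefix, any remaining letters/hyphens, then "/"-separated numbers
_IFACE_RE = re.compile(r"^([A-Za-z]{2})[A-Za-z-]*(\d+(?:/\d+)*)$")


def shorten_interface(name: str) -> str:
    """Return an IOS-style short name, e.g. GigabitEthernet1/0/17 -> Gi1/0/17.

    Names that don't match the pattern (e.g. subinterfaces) are returned unchanged.
    """
    match = _IFACE_RE.match(name)
    return f"{match.group(1)}{match.group(2)}" if match else name


print(shorten_interface("GigabitEthernet1/17"))    # Gi1/17
print(shorten_interface("GigabitEthernet1/0/17"))  # Gi1/0/17
print(shorten_interface("Gi1/17"))                 # Gi1/17
```

Keeping this logic in a small, separately testable function makes it easier to adapt when a new switch model uses a different naming scheme.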

We put these helper functions in a separate file, bin/helper_functions.py, to decouple them from the main business logic of our script, and call them as follows:

```python
# Modules
from nornir import InitNornir
from nornir_scrapli.tasks import send_command
import json

# Local artefacts
import bin.helper_functions as hf

# Variables
config_file = "./config.yaml"

# Body
if __name__ == "__main__":
    ## Initiate Nornir
    nr = InitNornir(config_file=config_file)

    ## Collect ARP from core
    nr_core = nr.filter(role="core")
    r1 = nr_core.run(task=send_command, command="show ip arp")

    ## Normalise ARP
    arp_table = hf.get_unique_hosts(arp_table=r1)

    ## Collect MAC from access
    nr_access = nr.filter(role="access")
    r2 = nr_access.run(task=send_command, command="show mac address-table")

    ## Collect Interface description
    r3 = nr_access.run(task=send_command, command="show interface description")

    ## Map all the data
    final_mapping = hf.match_ip_mac_port_description(arp_table=arp_table,
                                                     mac_table=r2, interfaces_table=r3)

    ## Print results
    print(f"{len(final_mapping)} results found:")
    print(json.dumps(final_mapping, indent=4, sort_keys=True))
```

The core tool is ready, so let's test it.

Run, Audit, Run!

Provided there is network connectivity to the audited data centre, you can run the tool:

```
178 results found:
{
    "192.168.1.11": {
        "interface": "GigabitEthernet1/17",
        "mac": "ac1f.6b21.ed22",
        "server": "HYPERVISOR-1",
        "switch": "access1",
        "vlan": 100
    },
    ...
}
```

We printed the output in an easily readable JSON format just to validate that our audit works properly. Ultimately, we built an integration to populate NetBox for this data centre automatically, but that is out of the scope of the audit itself.

Further Considerations

Step #1. Credentials

Originally, we put the credentials in defaults.yaml as shown above, but we didn't feel comfortable storing the password in clear text in a file. Moreover, once we started thinking about publishing the repo, it was immediately a no-go. So we modified the code to take the credentials from the Linux environment or the CLI. That significantly improved security: the credentials can be stored in the Linux environment and the audit run without extra typing, while keeping them out of the code.
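The post doesn't show this change, so here is a minimal sketch of one way to do it. It assumes the hypothetical environment variables AUTOMATION_USERNAME and AUTOMATION_PASSWORD, with an interactive prompt (via getpass) as the CLI option; the function name get_credentials is also ours.

```python
import os
from getpass import getpass
from typing import Mapping, Optional, Tuple

# Hypothetical variable names: adjust them to your environment
USER_VAR = "AUTOMATION_USERNAME"
PASS_VAR = "AUTOMATION_PASSWORD"


def get_credentials(source: str, env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Return (username, password) from the environment ("env") or an interactive prompt ("cli")."""
    if source == "env":
        env = os.environ if env is None else env
        try:
            return env[USER_VAR], env[PASS_VAR]
        except KeyError as missing:
            raise SystemExit(f"Environment variable {missing} is not set")
    if source == "cli":
        # getpass doesn't echo the password to the terminal
        return input("Username: "), getpass("Password: ")
    raise ValueError(f"Unknown credentials source: {source!r}")
```

The returned values can then be assigned to nr.inventory.defaults.username and nr.inventory.defaults.password right after InitNornir(), which makes them apply to every host and group that doesn't define its own credentials, so defaults.yaml no longer needs to contain them.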

Step #2. Control with Flags

One of the paradigms of software development is to control functionality with flags. This relates to rolling out new features, but also to changing the way the tool itself is executed (e.g., enabling debug mode). First of all, we needed a way to signal to the audit tool that the credentials should be taken from the environment. So we introduced the flag -c / --credentials, which lets you specify where the credentials should be taken from. If it is omitted, the tool takes them by default from the Nornir SimpleInventory plugin. We also slightly modified the output to show only the data we need…
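A minimal argparse sketch of such a flag is shown below. The value names env and cli are our assumption (the post only says that credentials come from the Linux environment or the CLI), and leaving the flag out yields None, which the script can treat as "keep the SimpleInventory defaults".

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the audit tool's command-line flags (argv=None means sys.argv)."""
    parser = argparse.ArgumentParser(description="Data centre host audit")
    parser.add_argument(
        "-c", "--credentials",
        choices=["env", "cli"],  # hypothetical value names
        default=None,
        help="where to take credentials from; omit to use the Nornir inventory defaults",
    )
    return parser.parse_args(argv)
```

For example, parse_args(["-c", "env"]).credentials returns "env", while parse_args([]).credentials is None. Restricting the values with choices makes argparse reject typos with a clear error instead of silently falling back to the defaults.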

Step #3. Data Lookup

Once the core functionality was working, we received a request from a customer asking whether the tool could help find a specific IP or MAC address in the network and trace it to a server without needing to connect to network devices. So we added a query flag -q / --query, which allows you to specify an IP address to look for in the ip=x.x.x.x format or a MAC address in the mac=aaaa.bbbb.cccc format.
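Once final_mapping has been built, the lookup itself is just a dictionary search. The sketch below assumes the final_mapping shape shown in the output earlier (IP address as the key; mac, switch, interface, vlan and server as fields); parse_query and lookup are hypothetical names, not the tool's actual code.

```python
from typing import Dict, Tuple


def parse_query(query: str) -> Tuple[str, str]:
    """Split 'ip=x.x.x.x' or 'mac=aaaa.bbbb.cccc' into (field, value)."""
    field, sep, value = query.partition("=")
    field = field.strip().lower()
    if not sep or field not in ("ip", "mac") or not value.strip():
        raise ValueError(f"Query must look like ip=<address> or mac=<address>, got {query!r}")
    return field, value.strip().lower()


def lookup(final_mapping: Dict[str, dict], query: str) -> Dict[str, dict]:
    """Return the entries of final_mapping that match the query."""
    field, value = parse_query(query)
    if field == "ip":
        # IP addresses are the keys, so this is a direct lookup
        return {value: final_mapping[value]} if value in final_mapping else {}
    # MAC addresses are values, so scan all entries (case-insensitive)
    return {ip: data for ip, data in final_mapping.items()
            if str(data.get("mac", "")).lower() == value}
```

A MAC lookup returns a dictionary rather than a single entry because, in principle, several IP addresses can share one MAC address (for example, a host with secondary addresses).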

What Else Can Be Done?

Our goal was just to collect and map the data into a format we can use further in Python (a dictionary). If we were to build a proper CLI tool, we might look at Pandas to build simple and nice ASCII tables directly in the CLI.
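As a minimal sketch of that idea, assuming the final_mapping shape shown in the output earlier and that pandas is installed, DataFrame.from_dict with orient="index" turns each IP address into a row and each field into a column; to_ascii_table is a hypothetical helper name.

```python
import pandas as pd


def to_ascii_table(final_mapping: dict) -> str:
    """Render {ip: {field: value}} as a plain-text table, one row per IP address."""
    df = pd.DataFrame.from_dict(final_mapping, orient="index")
    df.index.name = "ip"
    # Fix the column order and skip any columns that are missing
    columns = [c for c in ("mac", "switch", "interface", "vlan", "server") if c in df.columns]
    return df[columns].sort_index().to_string()


if __name__ == "__main__":
    sample = {"192.168.1.11": {"interface": "GigabitEthernet1/17", "mac": "ac1f.6b21.ed22",
                               "server": "HYPERVISOR-1", "switch": "access1", "vlan": 100}}
    print(to_ascii_table(sample))
```

Note that sort_index() orders IP addresses as strings (10.0.0.10 before 10.0.0.2); sorting with ipaddress.ip_address as the key would give numeric order if that matters for the report.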

Examples in GitHub

You can find this and other examples on our GitHub.

Lessons Learned

The main lesson learned is that things that should work don't always work as expected. We planned to build this audit tool around NAPALM, but unfortunately it didn't work as expected. Had it worked as planned, our own codebase would have been much smaller. On the other hand, we proved that knowledge of automation and of multiple frameworks and tools, which you get in our trainings, allows you to succeed in any situation.

Conclusion

Coming back to the point we made at the beginning: tools are better than us humans at routine tasks. Manual validation of the data would easily have taken a day or two for a data centre of this size, which has just a few hundred servers. With the automation approach we took, it took us about 4 hours to create and test the audit tool leveraging Python and Nornir, and 16 seconds to complete the audit. The clear savings are 4-12 hours, which we can spend on developing other automation frameworks. That's what we humans are good at: creating something new. Take care and goodbye.

Need Help? Contract Us

If you need a trusted and experienced partner to automate your network and IT infrastructure, get in touch with us.

P.S.

If you have further questions or need help with your networks, we are happy to assist; just send us a message. Also, don't forget to share the article on your social media if you like it.

BR,

Anton Karneliuk