Hello my friend,

Recently I was talking to a colleague from the network automation area, and during the discussion we touched upon the topic of NAPALM: which role it plays today and what its future may be. This discussion prompted me to think more about the topic, and I decided to share my thoughts with you.

Will Network Automation Become Less Popular?

No, it won’t. Actually, it is quite the opposite. Network automation is becoming even more important and is taking ever more complex forms, such as integration with Artificial Intelligence and Machine Learning (AI/ML) to help companies reduce the number and duration of outages. This doesn’t mean that traditional networking knowledge is less important: it is still absolutely essential. However, automation is unavoidable, and you have to know it in order to stay in the profession. And, much like in networking, where you learn the fundamentals of protocols before configuring them, in automation and software development you should start with the fundamentals in order to succeed. And we are committed to helping you with that.

We offer the following training programs:

- Zero-to-Hero Network Automation Training

- Automation with Nornir (2nd step after advanced network automation)

- Automation Orchestration with AWX

During these trainings you will learn the following topics:

- Success and failure strategies to build the automation tools.

- Principles of software development and the tools for it.

- Data encoding (free-text, XML, JSON, YAML, Protobuf)

- Model-driven network automation with YANG, NETCONF, RESTCONF, GNMI.

- Full configuration templating with Jinja2 based on the source of truth (NetBox).

- Best programming languages (Python, Bash) for developing automation, configuration management tools (Ansible) and automation frameworks (Nornir).

- Network automation infrastructure (Linux, Linux networking, KVM, Docker).

- Orchestration of automation workflows with AWX and its integration with NetBox, GitHub, as well as custom execution environments for better scalability.

Anton has a very good and structured approach to bootstrap you in the network automation field.

Angel Bardarov @ Telelink Business Services

Moreover, we put all the mentioned technologies in the context of real use cases, which our team has solved and is solving in various projects in service provider, enterprise and data centre networks and systems across Europe and the USA. That gives you the opportunity to ask questions to understand the solutions in depth and to discuss your own projects. On top of that, each technology comes with online demos, and afterwards you do the lab to master your skills. Such a mixture creates a unique learning environment, which all students value so much. Join us and unleash your potential.

Multivendor Automation Challenges

Networks in the real world are often built using hardware and/or software from different vendors, or at least different flavours from the same vendor. For example, you may have a data centre network running leaf switches from Arista and spine switches from Juniper (or vice versa). Or you may have one data centre running entirely on Nokia SR Linux and another one on Cisco Nexus. You may also have a DCI built between the data centres using Cisco ASRs, Nokia SR 7×50, some Huawei, or something else.

Reasons for Multivendor Networks

Why is that happening? What are the main drivers for using equipment from multiple vendors in the network? The question is quite complex, as there is no single answer: the choice of a network vendor is always a trade-off. However, we won’t be far from the truth by saying that such a decision is typically driven by one (or several) of the following factors:

- Cost. In formal language, this is known as TCO (Total Cost of Ownership), which is the sum of all expenses the company will have with regard to something (in our case, network equipment) over a certain period of time. TCO includes the CAPEX part, which is the price the company pays to purchase the hardware on day one, and the OPEX part, which is a collection of support and maintenance costs, team education, integration with other systems, etc. The financial magicians in big corporations are usually very proficient in converting OPEX to CAPEX for the purpose of financial accounting, but that is irrelevant for our conversation. In short, the lower the TCO, the better and the more preferable the solution.

- Time to market. It could be that good prices are available; however, the delivery of the hardware (or of a new software version) from the existing vendor may take unreasonably long and, therefore, a new vendor is chosen.

- Features and Specialization. One vendor may be very good at transport technologies (e.g., IP/MPLS core or backhaul network, Segment Routing, Traffic Engineering, etc.), another one is better in the security space (FW, IPS/IDS, WAF), whereas a third one shines in services (e.g., BNG).

- Stability and Risks. The easy categories are over, and now we are moving on to some trickier ones. All the major vendors have had very bad products (HW and/or SW) in their history, which caused a lot of headaches for them and their customers. Having only one vendor in the network means that the customer’s dependence on that vendor is very high, and if something happens to the vendor and/or product, the operational impact on the customer’s network will be tremendous.

- Vendor lock-in. Bringing more and more products from the same vendor is a double-edged sword: on the one hand, the customer may benefit from some end-to-end solutions, predominantly in the network management (NMS) space; on the other hand, it becomes incredibly hard to change direction if the customer and the vendor start having non-aligned views on further development.

All that often leads to a dual (or triple) vendor strategy, which means that customers bring into the network devices from different vendors doing the same role (e.g., PE routers) to balance the stability risk and vendor lock-in and to have better leverage in commercial negotiations. This, though, brings some operational complexity.

Complexity of Multivendor Networks

For the purpose of this blog post, we’ll put aside the administrative complexity (e.g., vendor management, contracts, support, etc.) and focus solely on the operational issues. What are they?

First and foremost, network devices from different vendors run different network operating systems (NOS). For example, Cisco ASR 9000 and Cisco NCS 5500 routers run Cisco IOS XR, Nokia SR 7×50 routers run Nokia SR OS, and Juniper MX routers run Juniper JUNOS. What does it mean for the end user? Let’s take a closer look:

- Different syntax. The CLI structure differs between operating systems. In a nutshell, you use one set of commands to configure and validate some feature (e.g., your routing protocol, BGP) in one NOS and a different set in another NOS. In fact, each NOS has its own set of commands to do so.

- Different operational practices. Some NOSes have a two-stage commit process (i.e., you type the configuration and then have a chance to review it before applying it), whereas others have a single-stage commit, where commands are applied immediately after you type them. Some NOSes require you to save the configuration explicitly, whilst others do that automatically.

- Different naming conventions. One NOS may name interfaces after their physical capabilities (e.g., GigabitEthernet0/0/0/0, et-0/0/0), whereas another may decouple the interface name from its physical parameters (e.g., interface to_EOS mapped to port 1/1/c1/1).
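To make the syntax difference tangible, here is a minimal sketch of the kind of lookup table every multivendor script ends up maintaining when it talks to the CLI directly. The intent name `bgp_summary` and the helper `command_for()` are hypothetical; the show commands are the ones commonly used on each platform, but double-check them against your software release.

```python
# Hypothetical lookup table: one operational intent, different CLI syntax per NOS.
# Commands are the commonly used ones; verify them against your software release.
SHOW_COMMANDS = {
    "bgp_summary": {
        "iosxr": "show bgp summary",
        "junos": "show bgp summary",
        "sros": "show router bgp summary",
        "eos": "show ip bgp summary",
    },
}


def command_for(nos: str, intent: str) -> str:
    """Return the CLI command that implements `intent` on the given NOS."""
    try:
        return SHOW_COMMANDS[intent][nos]
    except KeyError:
        raise ValueError(f"no command known for {intent!r} on {nos!r}") from None


if __name__ == "__main__":
    for nos in ("iosxr", "junos", "sros", "eos"):
        print(f"{nos:6} -> {command_for(nos, 'bgp_summary')}")
```

Even where the command is identical (IOS XR and JUNOS here), the output layout is not, so every NOS also needs its own parser for the result.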

All in all, this means that network engineers must be skilled in multiple vendors to be able to operate such a network. It is absolutely possible to find and hire (or to grow) such engineers; however, it takes time.

With the IT world’s drive towards self-service solutions, network automation becomes important, as stated before. Various networking vendors offer solutions, which are normally suitable for automating only some parts of their own portfolio, whereas multivendor automation remains a big challenge… Are there any solutions available?

Multivendor Automation Solutions

Yes, there are some. However, before digging into them, let’s formulate what we actually mean by “multivendor network automation”.

In short, the idea behind multivendor network automation is very simple: we want to be able to manage network devices from different vendors (all we have in the network) in the same way, using the same commands and having the same functionality (e.g., two-stage commit, rollback option, etc.).
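This wish list can be expressed as one common interface that every vendor-specific driver implements. The sketch below is purely illustrative: the classes `Device`, `TwoStageNos` and `ImmediateApplyNos` and the function `deploy()` are hypothetical, and the devices are simulated in memory. NAPALM’s real drivers expose a comparable set of operations under names such as `load_merge_candidate()`, `compare_config()`, `commit_config()`, `discard_config()` and `rollback()`.

```python
from abc import ABC, abstractmethod


class Device(ABC):
    """Hypothetical vendor-independent interface: the same calls for every NOS."""

    @abstractmethod
    def load_candidate(self, lines: list[str]) -> None:
        """Stage configuration lines without applying them."""

    @abstractmethod
    def compare(self) -> list[str]:
        """Return the staged lines that are not yet in the running config."""

    @abstractmethod
    def commit(self) -> None:
        """Apply the staged lines."""

    @abstractmethod
    def rollback(self) -> None:
        """Restore the config that was active before the last commit."""


class TwoStageNos(Device):
    """Simulated NOS with a native candidate config: the driver only delegates."""

    def __init__(self) -> None:
        self.running: list[str] = []
        self._candidate: list[str] = []
        self._checkpoint: list[str] = []

    def load_candidate(self, lines):
        new = [line for line in lines if line not in self.running]
        self._candidate = self.running + new

    def compare(self):
        return [line for line in self._candidate if line not in self.running]

    def commit(self):
        self._checkpoint = list(self.running)  # the "device" keeps a checkpoint
        self.running = list(self._candidate)

    def rollback(self):
        self.running = list(self._checkpoint)


class ImmediateApplyNos(Device):
    """Simulated NOS that applies every line at once: the driver emulates staging."""

    def __init__(self) -> None:
        self.running: list[str] = []
        self._staged: list[str] = []  # kept in the driver, not sent until commit
        self._snapshot: list[str] = []

    def _apply(self, line: str) -> None:
        # Stands in for typing one CLI line that takes effect immediately.
        if line not in self.running:
            self.running.append(line)

    def load_candidate(self, lines):
        self._staged = list(lines)

    def compare(self):
        return [line for line in self._staged if line not in self.running]

    def commit(self):
        self._snapshot = list(self.running)
        for line in self._staged:
            self._apply(line)
        self._staged = []

    def rollback(self):
        self.running = list(self._snapshot)


def deploy(device: Device, lines: list[str]) -> list[str]:
    """Vendor-agnostic workflow: stage, review the diff, commit only if needed."""
    device.load_candidate(lines)
    diff = device.compare()
    if diff:
        device.commit()
    return diff
```

`deploy()` never asks which vendor it is talking to: whether the two-stage commit is native or emulated is hidden inside the drivers, which is the whole promise of multivendor automation.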

Once it is clear what we want to achieve, let’s see what we have available.

Both solutions rely on Python. Join our Zero-to-Hero Network Automation Training to learn it in depth.

NAPALM

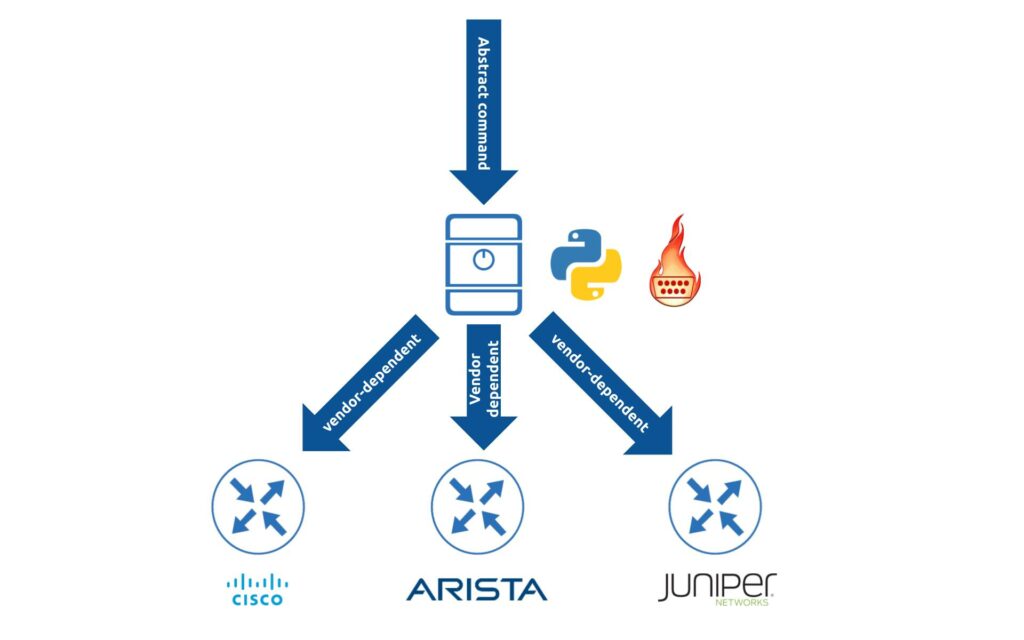

NAPALM stands for Network Automation and Programmability Abstraction Layer with Multivendor support. In a nutshell, it is a Python library which allows you to interact with network devices, perform requests in a vendor-agnostic format and receive structured data back. NAPALM started its journey back when model-driven automation (automation based on YANG modules and NETCONF, RESTCONF, GNMI) either did not exist yet or existed only in a very limited scope. As such, NAPALM took the approach of doing the heavy lifting of the different vendors’ CLI syntaxes in the library itself. Take a look at the following picture, which explains how NAPALM operates from a 1000-foot view:

At a high level:

- NAPALM has an internal set of Python classes, objects and methods, which are the same for all vendors. Their purpose is to allow the user to make a request to NAPALM in a vendor-independent manner (e.g., get_arp_table()); your Python script with NAPALM then translates it into the set of commands the destination host supports.

- After that, NAPALM connects to the network device and pushes the request in the vendor-dependent format. It is worth outlining that the connection method differs between network operating systems: for Arista EOS, NAPALM uses eAPI; for Cisco IOS XR, the XML API; etc.

- Once NAPALM receives the response from the network device in the vendor-dependent format, it parses it to normalise the data and returns it to the user in this normalised form.
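The three steps above (translate, send, parse and normalise) can be sketched in plain Python. This is not NAPALM’s source code: the driver classes, the simplified device outputs and the `send()` stand-in are hypothetical, and real drivers talk to devices over eAPI, the XML API, NETCONF, SSH and so on. What it does mirror is the shape of the result: NAPALM’s `get_arp_table()` returns a list of dictionaries with the keys `interface`, `mac`, `ip` and `age`, whatever the vendor.

```python
import re
from abc import ABC, abstractmethod


def normalise_mac(mac: str) -> str:
    """Turn '5254.0012.3456' or '52-54-00-12-34-56' into '52:54:00:12:34:56'."""
    digits = re.sub(r"[^0-9a-fA-F]", "", mac).lower()
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


class Driver(ABC):
    """Hypothetical base class: one public getter, vendor-specific internals."""

    command: str  # step 1: the vendor-specific command for the request

    def __init__(self, fake_output: str) -> None:
        self._fake_output = fake_output  # stands in for a real device session

    def send(self, command: str) -> str:
        # Step 2: a real driver would send `command` to the device; we return canned text.
        return self._fake_output

    def get_arp_table(self) -> list[dict]:
        raw = self.send(self.command)
        return self.parse_arp(raw)  # step 3: parse and normalise

    @abstractmethod
    def parse_arp(self, raw: str) -> list[dict]: ...


class VendorADriver(Driver):
    # Hypothetical, simplified output columns: IP, age as h:mm:ss, dotted MAC, interface
    command = "show ip arp"

    def parse_arp(self, raw):
        entries = []
        for line in raw.splitlines()[1:]:  # skip the header line
            ip, age, mac, interface = line.split()
            h, m, s = (int(x) for x in age.split(":"))
            entries.append({"interface": interface, "mac": normalise_mac(mac),
                            "ip": ip, "age": float(h * 3600 + m * 60 + s)})
        return entries


class VendorBDriver(Driver):
    # Hypothetical, simplified output columns: colon MAC, IP, interface, age in seconds
    command = "show arp"

    def parse_arp(self, raw):
        entries = []
        for line in raw.splitlines()[1:]:  # skip the header line
            mac, ip, interface, age = line.split()
            entries.append({"interface": interface, "mac": normalise_mac(mac),
                            "ip": ip, "age": float(age)})
        return entries


if __name__ == "__main__":
    a = VendorADriver("Address Age MAC Interface\n10.0.0.2 0:01:10 5254.0012.3456 Ethernet1\n")
    b = VendorBDriver("MAC Address Interface Age\n52:54:00:12:34:56 10.0.0.2 et-0/0/0.0 70\n")
    # Same method, same keys, same MAC format, whatever the vendor.
    print(a.get_arp_table())
    print(b.get_arp_table())
```

With the real library, the equivalent is roughly `driver = get_network_driver("eos")`, `device = driver(hostname, username, password)`, `device.open()`, `device.get_arp_table()` and `device.close()`, with NAPALM doing the translation and parsing for you.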

It may sound straightforward. However, by this time you may already have two questions.

Question #1. If NAPALM transforms the request into a vendor-specific one, which NOSes does it support?

Originally, NAPALM supported 5 network operating systems:

- Cisco IOS / IOS XE

- Cisco NX-OS

- Cisco IOS XR

- Juniper JUNOS

- Arista EOS

These drivers are called the core drivers. Over time, however, the community has developed more drivers for various network operating systems. Check the list of community drivers to see if your system is supported.
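How does a script pick up a community driver? NAPALM’s `get_network_driver(name)` can load both the core drivers shipped inside the `napalm` package and community drivers installed as separate packages whose module name follows the `napalm_<name>` convention (for example, `napalm_sros` for Nokia SR OS). The sketch below imitates that naming convention with `importlib` so you can check what is installed in your environment. The function `resolve_driver()`, the order of the checks and the set of core names are our illustrative assumptions, not NAPALM code.

```python
import importlib.util

# Illustrative subset of NAPALM core driver names (check the docs for the current list).
CORE_DRIVERS = {"eos", "ios", "iosxr", "junos", "nxos", "nxos_ssh"}


def resolve_driver(name: str) -> str:
    """Return the module path a driver `name` would be loaded from (hypothetical helper)."""
    if name in CORE_DRIVERS:
        return f"napalm.{name}"
    community_module = f"napalm_{name}"
    # find_spec() only locates the package on sys.path; it does not import it.
    if importlib.util.find_spec(community_module) is not None:
        return community_module
    raise LookupError(f"no core or community driver found for {name!r}")


if __name__ == "__main__":
    for name in ("eos", "sros", "ros"):
        try:
            print(f"{name:5} -> {resolve_driver(name)}")
        except LookupError as exc:
            print(f"{name:5} -> {exc}")
```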

Question #2. If NAPALM performs the request transformation, which requests can it transform?

The list of supported requests is available in the official documentation. It contains some core data sets (ARP table, MAC table, routing table, LLDP neighbors, BGP configuration and state). On top of that, it allows you to execute arbitrary CLI commands (which, obviously, will not be vendor-independent).

OpenConfig

Let’s take a look at another approach to solving the same multivendor automation tasks.

At approximately the same time as NAPALM was being actively developed (around 2015-2016), model-driven automation finally started taking off in the industry. Model-driven means that there is a certain data model (or data models) which defines how the configuration and operational state of network devices can be represented in the form of hierarchical key-value pairs.
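To make “hierarchical key-value pairs” concrete, here is an interface configuration shaped after the OpenConfig `openconfig-interfaces` module in its JSON encoding (with the module name as a prefix on the top-level node), plus a small hypothetical helper, `flatten()`, that turns the tree into path/value pairs similar to the paths used by GNMI. The interface name and description are made-up values.

```python
import json

# Interface config shaped after the openconfig-interfaces YANG module
# (JSON encoding; entries of the "interface" list are keyed by "name").
config = {
    "openconfig-interfaces:interfaces": {
        "interface": [
            {
                "name": "Ethernet1",
                "config": {"name": "Ethernet1", "description": "to_spine1", "enabled": True},
            }
        ]
    }
}


def flatten(node, path=""):
    """Hypothetical helper: yield (path, value) pairs for every leaf of the tree."""
    if isinstance(node, dict):
        for key, value in node.items():
            key = key.split(":")[-1]  # drop the module prefix for readability
            yield from flatten(value, f"{path}/{key}")
    elif isinstance(node, list):
        for entry in node:
            # A list entry is identified by its key leaf (assumed to be "name" here),
            # which moves into the path instead of being emitted as a separate leaf.
            rest = {k: v for k, v in entry.items() if k != "name"}
            yield from flatten(rest, f"{path}[name={entry['name']}]")
    else:
        yield path, node


if __name__ == "__main__":
    print(json.dumps(config, indent=2))
    for leaf_path, value in flatten(config):
        print(leaf_path, "=", value)
```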

You may think: “Wait a minute, you told us that NAPALM has structured data sets.” Indeed, NAPALM is a Python library, and it operates within Python with the attributes of a programming language, such as structured data. However, the network devices, in the way NAPALM communicates with them, don’t expose a structured model: in many cases NAPALM relies on CLI (or CLI-ish) output. In model-driven automation, by contrast, the data models are implemented directly on the network devices, and communication with the devices is structured end to end, without any transformation, using one of the suitable protocols: NETCONF, RESTCONF, or GNMI.

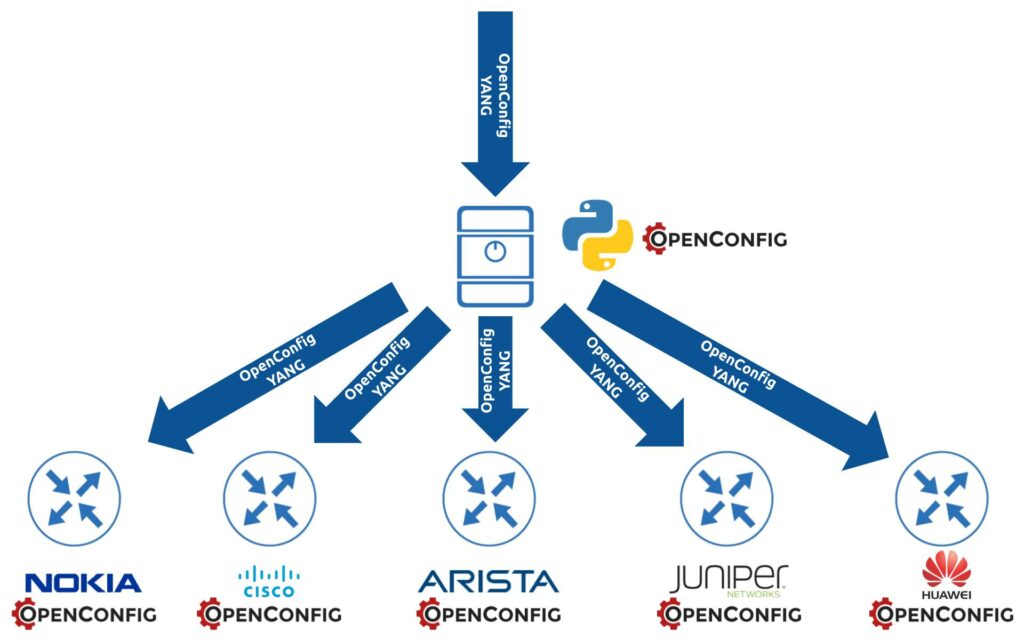

So, it is the data model, expressed in YANG, that defines what you can actually configure on or retrieve from the router. And this is where OpenConfig enters the stage. OpenConfig is a collection of vendor-independent YANG modules which cover a wide range of device operational characteristics and, therefore, if implemented in network operating systems, allow you to achieve end-to-end vendor-agnostic automation:

In our Network Automation Training, we cover all the details of OpenConfig and help you start using it.

At a high level:

- The prerequisite is that OpenConfig YANG modules are implemented (fully or partially) in the network operating systems.

- You prepare the request directly in OpenConfig format, send it to the device via NETCONF, RESTCONF, or GNMI (whichever is supported by the NOS), and receive the output in the same format.

- The same OpenConfig YANG modules can even be used for telemetry streaming.
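Here is what “prepare the request directly in OpenConfig format” can look like with RESTCONF (RFC 8040), using only the Python standard library. The device hostnames and the helper `build_restconf_patch()` are hypothetical, `/restconf` is the commonly used root (RFC 8040 lets a server advertise a different one), and the requests are only built, not sent, so the sketch runs without a lab. The point is that the body is identical for every vendor that implements the module; only the target changes.

```python
import json
import urllib.request

# One vendor-agnostic payload based on the openconfig-interfaces module.
payload = {
    "openconfig-interfaces:interfaces": {
        "interface": [
            {"name": "Ethernet1", "config": {"name": "Ethernet1", "description": "to_spine1"}}
        ]
    }
}


def build_restconf_patch(host: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) a RESTCONF PATCH that merges `body` into the config."""
    return urllib.request.Request(
        url=f"https://{host}/restconf/data/openconfig-interfaces:interfaces",
        data=json.dumps(body).encode(),
        headers={
            "Content-Type": "application/yang-data+json",
            "Accept": "application/yang-data+json",
        },
        method="PATCH",
    )


if __name__ == "__main__":
    # Same body for both (hypothetical) devices; authentication and TLS are omitted.
    for host in ("device-a.example.net", "device-b.example.net"):
        req = build_restconf_patch(host, payload)
        print(req.get_method(), req.full_url, len(req.data), "bytes")
```

For telemetry, the same module is addressed by paths such as `/interfaces/interface[name=Ethernet1]/state/counters` in a GNMI subscription, so configuration and monitoring share one data model.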

It is probably worth answering the same questions as before.

Question #1. Which NOSes support OpenConfig?

OpenConfig was originally created by Google and is used to manage the network in its data centres. Therefore, it was (and still is) an entry criterion for vendors wanting to do business with Google, which has probably the biggest network in the world and, therefore, big buying power. That’s why the list of vendors is relatively long:

- Cisco IOS XR

- Cisco NX-OS

- Juniper JUNOS

- Nokia SR OS

- Huawei

- Arista EOS

- Arrcus OS

- etc

However…

Question #2. What is covered by OpenConfig YANG modules?

Google uses a network topology in its data centres that suits hyperscalers, but not small and medium (or even large) enterprises. That formed the original focus of OpenConfig: connectivity and routing. Things such as IP/MPLS VPN and EVPN were completely out of scope for OpenConfig for a long time and, therefore, were not covered by its YANG modules. For example, EVPN was added only in June 2021. This leads to two important conclusions:

- You need to check whether OpenConfig is supported by your network operating system and, if it is, which modules.

- You need to check the content of the modules, as the vendor-specific implementation of OpenConfig may deviate from the official one.
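Both checks can be partly automated. A NETCONF server advertises the YANG 1.0 modules it implements as capability URIs in its `<hello>` message, and each URI carries `module`, `revision` and, where present, a comma-separated `deviations` query parameter (RFC 6020); YANG 1.1 modules are listed in the YANG library (`ietf-yang-library`) instead. The sketch below parses such URIs with `urllib.parse`. The capability strings, revisions and the deviation module name are invented examples, not output from a real device, and `openconfig_modules()` is a hypothetical helper.

```python
from urllib.parse import parse_qs, urlsplit

# Invented examples of capability URIs as they might appear in a NETCONF <hello>.
CAPABILITIES = [
    "urn:ietf:params:netconf:base:1.1",
    "http://openconfig.net/yang/interfaces?module=openconfig-interfaces&revision=2021-04-06",
    "http://openconfig.net/yang/bgp?module=openconfig-bgp&revision=2022-05-21"
    "&deviations=example-openconfig-bgp-deviations",
]


def openconfig_modules(capabilities):
    """Map each advertised openconfig-* module to its revision and deviation modules."""
    modules = {}
    for uri in capabilities:
        params = parse_qs(urlsplit(uri).query)
        name = params.get("module", [""])[0]
        if not name.startswith("openconfig-"):
            continue  # base capabilities and non-OpenConfig modules are ignored
        deviations = params.get("deviations", [""])[0]
        modules[name] = {
            "revision": params.get("revision", [None])[0],
            "deviations": [d for d in deviations.split(",") if d],
        }
    return modules


if __name__ == "__main__":
    for name, info in openconfig_modules(CAPABILITIES).items():
        state = "deviated by " + ", ".join(info["deviations"]) if info["deviations"] else "no deviations"
        print(f"{name} (rev {info['revision']}): {state}")
```

A non-empty `deviations` list tells you exactly which vendor module to read to see where the implementation differs from the published OpenConfig model.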

NAPALM vs OpenConfig Comparison

Let’s bring the main pieces together in a single table to compare them:

|  | NAPALM | OpenConfig |
|---|---|---|
| Transport | Platform-specific; in many cases relies on legacy APIs | NETCONF, RESTCONF, GNMI |
| Coverage | Covers limited, but useful, functionality in terms of vendor-normalised data collection. Allows execution of arbitrary CLI commands. | Covers predominantly connectivity use cases. However, it is being actively developed and new scenarios are being added. |
| Supported NOS | 5 core drivers: Cisco IOS/IOS XR/NX-OS, Juniper JUNOS, Arista EOS. A number of community drivers for other NOSes. | Almost all modern NOSes support OpenConfig in some capacity: Cisco IOS/IOS XR/NX-OS, Juniper JUNOS, Arista EOS, Nokia SR OS, Huawei |
| Complexity | The complexity is in the library itself. The network devices don’t have to support any particular extra features. | The complexity is moved down to the network devices: OpenConfig YANG modules must be implemented in the NOS. |
| Benefits | Has existed in the market for quite a while; suitable for older NOSes; normalises the user experience across different NOSes | Represents future-proof model-driven automation; implements the same data models on different vendors, so you get the same data; easy to use if implemented; driven by Google and widely adopted by other truly big networks |
| Drawbacks | Limited number of defined data sets for multivendor networks; more advanced configuration still requires vendor-dependent commands, which moves us away from multivendorness | Coverage varies between vendors and even between NOS versions; it may be impossible to implement the desired configuration |

Lessons Learned

The last time I looked into NAPALM was a while ago, when there were not that many community-created drivers. Therefore, the usage of NAPALM, especially in service provider networks, which are often built using Nokia SR OS routers, was relatively limited. Now, with those additions, it is possible to use NAPALM in a mixed setup with Nokia SR OS (and even Nokia SR Linux), which is indeed a good move.

Conclusion

What does it all mean? Is one technology better than the other? Not necessarily. Both technologies have their own benefits and drawbacks. At the same time, with the further development and adoption of model-driven technologies, i.e. automation based on YANG models with NETCONF, RESTCONF, and GNMI, we believe that OpenConfig is the more future-proof approach. It may also evolve from OpenConfig, which is not IETF-standardised, into some RFC-based set of YANG models; in fact, this process has already started. In a future blog post, we’ll show you the comparison between NAPALM and OpenConfig in action. Take care and goodbye.


P.S.

If you have further questions or need help with your networks, we are happy to assist you; just send us a message. Also, don’t forget to share the article on your social media if you like it.

BR,

Anton Karneliuk