Hello my friend,

Christmas and the New Year are coming, so it is the usual time to think about the future and plan ahead. Today we look into the future by reviewing the new network operating system deployed in the data centres hosting the Microsoft Azure cloud. This operating system is called SONiC: Software for Open Networking in the Cloud.

retrieval system, or transmitted in any form or by any means, electronic, mechanical or photocopying, recording, or otherwise, for commercial purposes without the prior permission of the author.

Disclaimer

If you are even more interested in your future, join our network automation training, which starts soon. It covers the details of data modelling, NETCONF/YANG, gNMI, REST API, Python and Ansible in a multivendor environment with Cisco, Nokia, Arista and Cumulus Linux as network functions.

Thanks

Special thanks to Avi Alkobi from Mellanox for providing me with the Mellanox SN2010 for tests and the initial documentation for the Microsoft Azure SONiC setup.

Brief description

Everyone talks about clouds. You can hear about public clouds, private clouds, hybrid clouds, cloud interconnection and so on at each and every IT or network conference these days. The major focus is, obviously, on how customers can benefit from using clouds for their business. In this context, discussions about public clouds are particularly popular. The next level of the cloud offering is serverless applications. Here is an extract from Wikipedia:

Serverless computing is a cloud-computing execution model in which the cloud provider runs the server, and dynamically manages the allocation of machine resources. Pricing is based on the actual amount of resources consumed by an application, rather than on pre-purchased units of capacity.

The main driver for companies is to pay less for their IT workload, as the intention is to pay only for the resources used, not for pre-allocated ones. On the other hand, the cloud service provider can resell its infrastructure more effectively by achieving a higher level of oversubscription.

Although the concept is called “serverless”, that only means that the customer doesn’t deal with (or even care about) the servers. Servers, however, still exist, as they host customer applications; they are just managed by the cloud service provider. And if servers exist, they must be somehow interconnected. This “somehow” is the core of our article today.

The three most popular public clouds are Amazon Web Services (AWS), Google Cloud Platform (GCP) and Microsoft Azure (Azure). The network infrastructure of the public clouds is very different from that of traditional data centres. The difference comes from the scale, as the public clouds are built across thousands of servers connected by hundreds of switches per data centre. That is why the big public cloud providers (and some other big internet companies such as Facebook) are called hyperscalers. The following characteristics are the most important for them:

- Price per port per Gbps. Despite the amount of money the public cloud providers make from their customers, they have an enormous number of servers, switches and other infrastructure to operate and maintain. That’s why they try to keep the cost of the network as low as possible, which requires looking for alternatives to the traditional custom silicon (hardware) created by popular network vendors. This is why merchant silicon (such as Broadcom chipsets) is very popular among the cloud service providers.

- Vendor-neutrality. Google created an OpenConfig YANG model to unify configuration of their data centre devices coming from multiple vendors.

We covered the OpenConfig in several articles and the GitHub project.

One of the biggest challenges of network automation is to make it work in a multi-vendor environment, and Google solved (well, partially solved) this problem in an elegant manner. Google required network vendors to implement the OpenConfig YANG models in their devices if the vendors want the devices to be used in Google’s network.

Learn more about multi-vendor network automation and OpenConfig at our network automation training.

- Simplicity. The problem with complex technologies such as EVPN/VXLAN is that they are hard to troubleshoot when they break (not to mention that they require advanced capabilities of the switch silicon (hardware), which contradicts the first point). That is why the public clouds are, network-wise, built with little to no overlay. It doesn’t mean that there are no overlays at all; it just means that if overlays are created, they are terminated directly on the hypervisors rather than on the leaf (top of rack) switches, and all the network elements (leafs, spines, super-spines, borders) just route the traffic between the servers and from the servers to the Internet.

The idea of building data centre networks using eBGP fabric comes from hyperscalers.

There are many more requirements, but these three are enough for the purposes of this article, because Microsoft took them into account when developing the network operating system used on the network infrastructure in the data centres hosting the Microsoft Azure cloud. This network operating system is called SONiC: Software for Open Networking in the Cloud. There are plenty of platforms on which you can install SONiC, basically all the devices running ONIE. Among others, you can find Broadcom, Barefoot and Mellanox.

Today we’ll cover the installation of Microsoft Azure SONiC on the Mellanox SN2010 and the configuration necessary to turn your environment into a hyperscaler one.

What are we going to test?

The following points are covered in this article:

- Installation of the Microsoft Azure SONiC on Mellanox SN 2010 running Cumulus Linux

- Configuration of the physical ports

- Configuration of the IP addresses

- Configuration of eBGP and peering with Nokia SR OS / Cisco IOS XR running devices.

Software version

The following software components are used in this lab.

Management host:

- CentOS 7.5.1804 with python 2.7.5

- Ansible 2.8.0

- Docker-CE 18.09

Enabler and monitoring infrastructure:

- Base Linux image for container: Alpine Linux 3.9

- DHCP: ISC DHCP 4.4.1-r2

- HTTP: NGINX 1.14.2-r1

The Data Centre Fabric:

- Mellanox SN 2010

- Microsoft Azure SONiC [on Mellanox SN 2010]

- Nokia VSR 19.10.R1 [guest VNF]

- Cisco IOS XRv 6.5.1 [guest VNF]

You may find more details about the Data Centre Fabric in the previous articles.

Topology

Physical topology is exactly the same as it was earlier in all the articles covering Mellanox SN 2010 labs:

| |

| /\/\/\/\/\/\/\/\/\/\/\/\/\/\/\/\ |

| / Docker cloud \ |

| (c)karneliuk.com / +------+ \ |

| \ +---+ DHCP | / |

| Mellanox/Cumulus lab / | .2+------+ \ |

| \ | / |

| \ 172.17.0.0/16 | +------+ \ |

| +-----------+ \+------------+ +---+ DNS | / |

| | | | +----+ .3+------+ \ |

| | Mellanox | 169.254.255.0/24 | Management |.1 | / |

| | SN2010 | fc00:de:1:ffff::/64 | host | | +------+ \ |

| | | | | +---+ FTP | / |

| | mlx-cl | eth0 enp2s0f1 | carrier |\ | .4+------+ \ |

| | | .21 .1 | | \ | / |

| | | :21 :1 | +------+ | / | +------+ \ |

| | +------------------------+ br0 | | \ +---+ HTTP | / |

| | | | +------+ | \ .5+------+ \ |

| | | | | \ / |

| | | swp1 ens2f0 | +------+ | \/\/\/\/\/\/\/ |

| | +------------------------+ br1 | | |

| | | | +------+ | |

| | | | | |

| | | swp7 ens2f1 | +------+ | |

| | +------------------------+ br2 | | |

| | | | +------+ | |

| | | | | |

| +-----------+ +------------+ |

| |

+-----------------------------------------------------------------------+

The details about setting up the Mellanox SN 2010 using ZTP infrastructure are provided in a separate article.

The logical topology is almost the same as the one used for Segment Routing in Open Network with Mellanox and Cumulus, where MS Azure SONiC now takes the place of Cumulus:

| |

| +---------+ 169.254.0.0/31 +-----------+ 169.254.0.2/31 +---------+ |

| | | | | | | |

| | SR1 +----------------+ mlx-sonic +----------------+ XR1 | |

| |AS:65001 | .0 .1 | AS:65002 | .2 .3 |AS:65003 | |

| +----+----+ <---eBGP-LU--> +-----+-----+ <---eBGP-LU--> +----+----+ |

| | | | |

| +++ +++ +++ |

| system lo Lo0 |

| IPv4:10.0.0.1/32 IPv4:10.0.0.2/32 IPv4:10.0.0.3/32 |

| |

| <-----------------------eBGP-VPNV4----------------------> |

| |

| Microsoft Azure SONiC in DC |

| (c) karneliuk.com |

| |

+------------------------------------------------------------------------------+

If you want to learn more about server lab setup, refer to the corresponding article.

As you can see, we plan to configure quite a standard spine role, which is almost the same as the leaf role in a hyperscaler environment. The difference is that a server can be connected to a leaf over a host prefix (like /31 for IPv4) rather than using BGP, though it might be connected using BGP as well.
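To make the /31 addressing from the logical topology concrete, here is a minimal Python sketch that lists both addresses of each point-to-point link. The prefixes are taken from the diagram above; the `LINKS` dictionary and the `link_addresses` helper are illustrative names and not part of any SONiC tooling.

```python
import ipaddress

# Point-to-point links from the logical topology: (left device, right device) -> prefix.
LINKS = {
    ("SR1", "mlx-sonic"): "169.254.0.0/31",
    ("mlx-sonic", "XR1"): "169.254.0.2/31",
}


def link_addresses(prefix):
    """Return the two interface addresses of a /31 link, lowest first."""
    network = ipaddress.ip_network(prefix)
    if network.prefixlen != 31:
        raise ValueError(f"{prefix} is not a /31 point-to-point prefix")
    low, high = list(network)  # a /31 holds exactly two addresses
    return f"{low}/31", f"{high}/31"


for (left, right), prefix in LINKS.items():
    left_ip, right_ip = link_addresses(prefix)
    print(f"{left}: {left_ip} <-> {right}: {right_ip}")
```

Iterating over the network object, rather than calling `hosts()`, keeps the behaviour the same across Python 3 versions for such short prefixes.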

You can find the topology and the relevant files on my GitHub page.

Installing Microsoft Azure SONiC on a Mellanox switch running another NOS

The beauty (and, in a certain sense, the challenge) of Open Networking and disaggregation is that you need to install the operating system (called a network operating system, or NOS) on the device yourself. It is a bit different from the legacy approach, where the vendor NOS is already installed when the device is shipped to you, and all you need to do is update the software.

In Open Networking, the switch is typically shipped only with ONIE (Open Network Install Environment) on board, which is a basic Linux distribution, and you need to install the NOS yourself.

The official documentation explains how to do that.

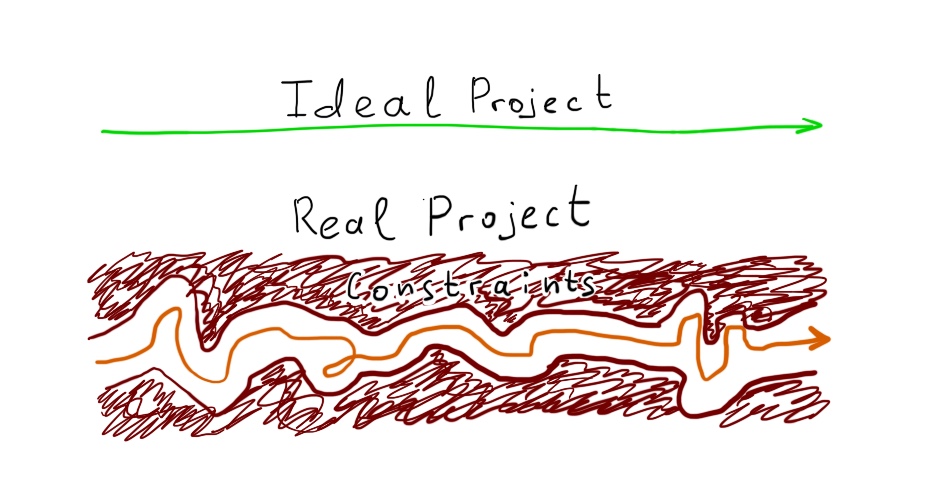

However, this blogpost is a continuation of the previous series about Mellanox, where Cumulus Linux was already installed. Therefore, I have some challenges that make my case more complicated and that I need to solve:

- I don’t have pure ONIE, so I need to uninstall the existing NOS (Cumulus Linux).

- Once I get a clean ONIE, I need to install MS Azure SONiC.

- I don’t have serial console access to the switch (as I don’t have a cable), so whatever I do must be automated in some form, allowing me to work without the serial port and bring the device straight to the state where SSH is available.

There is no creativity without restrictions.

OK, challenge accepted. We start by analysing how our infrastructure can meet these criteria. As you might recall, at the beginning of the setup with the Mellanox SN2010 we built zero-touch provisioning using a DNS/DHCP stack. We’ll follow the same approach here, so we modify the dhcpd.conf file to point to the Microsoft Azure SONiC image using the default-url DHCP option:

# dhcpd.conf

# private DHCP options

option cumulus-provision-url code 239 = text;

default-lease-time 600;

max-lease-time 7200;

authoritative;

# IPv4 OOB management subnet for the data center

subnet 169.254.255.0 netmask 255.255.255.0 {

range 169.254.255.129 169.254.255.254;

option domain-name-servers 8.8.8.8;

option routers 169.254.255.1;

option broadcast-address 169.254.255.255;

}

# IPv4 OOB // Fixed lease

host mlx-cl {

hardware ethernet b8:59:9f:09:99:6c;

fixed-address 169.254.255.21;

option host-name "mlx-sonic";

option default-url "http://169.254.255.1/images/sonic-mellanox.bin";

}

You can freely download the SONiC image matching your requirements from the official GitHub repo.
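Since every switch needs its own fixed-lease entry, the host block can be generated rather than typed by hand. The sketch below renders a stanza equivalent to the one in the dhcpd.conf listing from a few parameters. `host_stanza` is a hypothetical helper, and the values are simply the ones used in this lab.

```python
def host_stanza(name, mac, ip, hostname, image_url):
    """Render an ISC dhcpd fixed-lease host block that advertises an ONIE image URL."""
    return "\n".join([
        f"host {name} {{",
        f"    hardware ethernet {mac};",
        f"    fixed-address {ip};",
        f'    option host-name "{hostname}";',
        f'    option default-url "{image_url}";',
        "}",
    ])


stanza = host_stanza(
    name="mlx-cl",
    mac="b8:59:9f:09:99:6c",
    ip="169.254.255.21",
    hostname="mlx-sonic",
    image_url="http://169.254.255.1/images/sonic-mellanox.bin",
)
print(stanza)
```

Keeping the image URL as a parameter makes it easy to point the same switch at another NOS image later.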

Once the configuration file of the DHCP server is modified, we need to restart the appropriate Docker container:

You may find the details of the DHCP Docker container in the corresponding article.

At this point, we are prepared to boot the switch with SONiC as soon as we get ONIE on our Mellanox SN 2010. That’s why we can start by uninstalling Cumulus Linux, which we currently have on our device:

WARNING:

WARNING: ONIE uninstall mode requested.

WARNING: This will wipe out all system data.

WARNING: Make sure to back up your data.

WARNING:

Are you sure (y/N)? y

Enabling ONIE uninstall mode at next reboot...done.

Reboot required to take effect.

cumulus@mlx-cl:mgmt-vrf:~$ sudo reboot

Connection to 169.254.255.21 closed by remote host.

The documentation says that a complete uninstall may take up to 30 minutes on Mellanox switches. In my case, it took about 10 minutes to get a clean ONIE environment in place of Cumulus Linux.

Once the switch is rebooted, we get into the ONIE CLI:

ONIE:~ #

There are multiple ONIE boot modes, and to be able to install a new network operating system, we must be in install or rescue mode. So we switch to it:

At this point, our Mellanox SN 2010 switch reboots one more time and gets into the proper mode, so that we can now install Microsoft Azure SONiC:

Notice: Invalid TLV header found. Using default contents.

Notice: Invalid TLV checksum found. Using default contents.

Info: Attempting http://169.254.255.1/images/sonic-mellanox.bin ...

Connecting to 169.254.255.1 (169.254.255.1:80)

installer 100% |*************************************************************| 748M 0:00:00 ETA

Verifying image checksum ...

Verifying image checksum ... OK.

Preparing image archive ... OK.

Installing SONiC in ONIE

ONIE Installer: platform: x86_64-mellanox-r0

onie_platform: x86_64-mlnx_msn2010-r0

deleting partition 3 ...

Filesystem 1K-blocks Used Available Use% Mounted on

Warning: The kernel is still using the old partition table.

The new table will be used at the next reboot.

The operation has completed successfully.

Partition #1 is in use.

Partition #2 is in use.

Partition #3 is available

Creating new SONiC-OS partition /dev/sda3 ...

Could not create partition 3 from 268288 to 67377151

Unable to set partition 3's name to 'SONiC-OS'!

Error encountered; not saving changes.

Warning: The first trial of creating partition failed, trying the largest aligned available block of sectors on the disk

Warning: The kernel is still using the old partition table.

The new table will be used at the next reboot.

The operation has completed successfully.

mke2fs 1.42.13 (17-May-2015)

Discarding device blocks: done

Creating filesystem with 3876113 4k blocks and 969136 inodes

Filesystem UUID: 5b70dea5-1a10-45f1-b01f-5c092d1ef4aa

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

Installing SONiC to /tmp/tmp.RLZA6l/image-HEAD.141-3853b31f

Archive: fs.zip

creating: boot/

inflating: boot/initrd.img-4.9.0-9-2-amd64

inflating: boot/System.map-4.9.0-9-2-amd64

inflating: boot/config-4.9.0-9-2-amd64

inflating: boot/vmlinuz-4.9.0-9-2-amd64

creating: platform/

creating: platform/x86_64-grub/

inflating: platform/x86_64-grub/grub-pc-bin_2.02~beta3-5+deb9u2_amd64.deb

inflating: platform/firsttime

inflating: fs.squashfs

Success: Support tarball created: /tmp/onie-support-mlnx_msn2010.tar.bz2

Installing for i386-pc platform.

Installation finished. No error reported.

Installed SONiC base image SONiC-OS successfully

ONIE:~ # Connection to 169.254.255.21 closed by remote host.

Connection to 169.254.255.21 closed.

It looks like we have some issues with default-url in the DHCP server, so we will troubleshoot it the next time.

So far, Microsoft Azure SONiC is installed, and after the reboot we get to its prompt:

The authenticity of host '169.254.255.21 (169.254.255.21)' can't be established.

RSA key fingerprint is SHA256:7STt8e6NhYg+5TaaOXgKvQS+fXjBHS13OAUl8w0Ggic.

RSA key fingerprint is MD5:e3:21:99:24:b6:c6:0e:c0:af:52:34:35:e1:e8:de:1e.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '169.254.255.21' (RSA) to the list of known hosts.

admin@169.254.255.21's password:

Linux mlx-cl 4.9.0-9-2-amd64 #1 SMP Debian 4.9.168-1+deb9u5 (2015-12-19) x86_64

You are on

____ ___ _ _ _ ____

/ ___| / _ \| \ | (_)/ ___|

\___ \| | | | \| | | |

___) | |_| | |\ | | |___

|____/ \___/|_| \_|_|\____|

-- Software for Open Networking in the Cloud --

Unauthorized access and/or use are prohibited.

All access and/or use are subject to monitoring.

Help: http://azure.github.io/SONiC/

admin@mlx-sonic:~$

The first step is accomplished, and we can move on to verification and configuration.

Initial verification

Microsoft Azure SONiC is Debian Linux with some additional components. Being Linux, SONiC has all the Linux tools available, much in the way Cumulus has. However, it also has its own CLI, which is available directly from Bash, so you have the show commands that network engineers like so much. The first one you will use is the following:

Warning: failed to retrieve PORT table from ConfigDB!

SONiC Software Version: SONiC.HEAD.141-3853b31f

Distribution: Debian 9.11

Kernel: 4.9.0-9-2-amd64

Build commit: 3853b31f

Build date: Wed Dec 4 05:54:05 UTC 2019

Built by: johnar@jenkins-worker-8

Platform: x86_64-mlnx_msn2010-r0

HwSKU: ACS-MSN2010

ASIC: mellanox

Serial Number: MT1915X01130

Uptime: 04:45:19 up 10 min, 1 user, load average: 3.13, 2.82, 1.63

Docker images:

REPOSITORY TAG IMAGE ID SIZE

docker-syncd-mlnx HEAD.141-3853b31f 26c494a3f407 373MB

docker-syncd-mlnx latest 26c494a3f407 373MB

docker-platform-monitor HEAD.141-3853b31f b3669e2a2520 565MB

docker-platform-monitor latest b3669e2a2520 565MB

docker-fpm-frr HEAD.141-3853b31f f4540389a162 321MB

docker-fpm-frr latest f4540389a162 321MB

docker-sflow HEAD.141-3853b31f 35f99a32ceb5 305MB

docker-sflow latest 35f99a32ceb5 305MB

docker-lldp-sv2 HEAD.141-3853b31f e7cb13694b80 299MB

docker-lldp-sv2 latest e7cb13694b80 299MB

docker-dhcp-relay HEAD.141-3853b31f eb1ccaadaecc 289MB

docker-dhcp-relay latest eb1ccaadaecc 289MB

docker-database HEAD.141-3853b31f dc465dabd6f4 281MB

docker-database latest dc465dabd6f4 281MB

docker-teamd HEAD.141-3853b31f 654834dc4537 304MB

docker-teamd latest 654834dc4537 304MB

docker-snmp-sv2 HEAD.141-3853b31f 5033fe225203 335MB

docker-snmp-sv2 latest 5033fe225203 335MB

docker-orchagent HEAD.141-3853b31f 1abb247a6bfc 322MB

docker-orchagent latest 1abb247a6bfc 322MB

docker-sonic-telemetry HEAD.141-3853b31f b0ac8274cb72 304MB

docker-sonic-telemetry latest b0ac8274cb72 304MB

docker-router-advertiser HEAD.141-3853b31f 39e4e55a743f 281MB

docker-router-advertiser latest 39e4e55a743f 281MB

At the beginning of the output you can see the details of the underlying Debian Linux, as well as of the hardware on which Microsoft Azure SONiC is installed. The second part of the output is even more intriguing, as you can see plenty of Docker images.

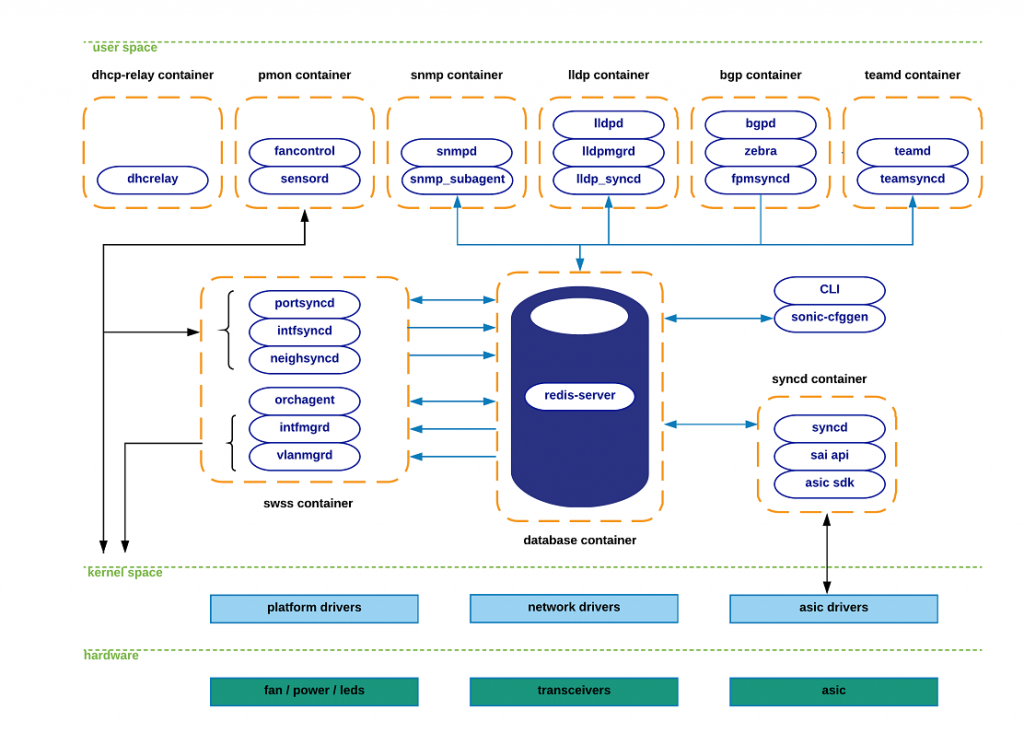

The reason for that is the architecture of the SONiC software, where the vast majority of functions are offloaded out of the Debian Linux to the Docker containers. The following image explains the SONiC architecture:

As you see, there are multiple containers, which interact mainly with the database container, and some of them also interact with processes in the Linux kernel.

As all the containers are running on Docker, we can verify their status using the Docker techniques:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3a7b96bda295 docker-router-advertiser:latest "/usr/bin/supervisord" 10 minutes ago Up 9 minutes radv

0246271b29eb docker-sflow:latest "/usr/bin/supervisord" 10 minutes ago Up 9 minutes sflow

e9fa12c6b7a5 docker-platform-monitor:latest "/usr/bin/docker_ini…" 10 minutes ago Up 9 minutes pmon

bf3f7471dc2c docker-snmp-sv2:latest "/usr/bin/supervisord" 10 minutes ago Up 9 minutes snmp

ca76acb82c39 docker-lldp-sv2:latest "/usr/bin/supervisord" 10 minutes ago Up 10 minutes lldp

6dfcef72194f docker-dhcp-relay:latest "/usr/bin/docker_ini…" 10 minutes ago Up 9 minutes dhcp_relay

226b90f9e007 docker-syncd-mlnx:latest "/usr/bin/supervisord" 10 minutes ago Up 9 minutes syncd

08c6de3cd38a docker-teamd:latest "/usr/bin/supervisord" 11 minutes ago Up 9 minutes teamd

5becc751a893 docker-orchagent:latest "/usr/bin/supervisord" 12 minutes ago Up 9 minutes swss

2c0e620f1eae docker-fpm-frr:latest "/usr/bin/supervisord" 12 minutes ago Up 12 minutes bgp

576d954278a5 docker-database:latest "/usr/local/bin/dock…" 12 minutes ago Up 12 minutes database

All the Docker images listed in the output of the show version command have active instances, which means that Docker is operationally correct so far.

Going back to the kernel space, there are some processes (besides Docker, obviously) which we may check. The following service is related to the hardware management, so it should be up and in an error-free state:
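The same check can be scripted. The sketch below assumes the container names and states were captured as tab-separated text, for example with something like `docker ps -a --format '{{.Names}}\t{{.Status}}'`. The `not_running` helper and the sample input are illustrative, and the expected names are the ones from the NAMES column above.

```python
# Container names taken from the NAMES column of the listing above.
EXPECTED = {
    "radv", "sflow", "pmon", "snmp", "lldp", "dhcp_relay",
    "syncd", "teamd", "swss", "bgp", "database",
}


def not_running(names_and_status, expected=EXPECTED):
    """Return expected containers that are absent or whose status is not 'Up ...'.

    names_and_status holds one 'name<TAB>status' pair per line.
    """
    up = set()
    for line in names_and_status.splitlines():
        if not line.strip():
            continue
        name, _, status = line.partition("\t")
        if status.strip().startswith("Up"):
            up.add(name.strip())
    return sorted(set(expected) - up)


# Hypothetical capture: swss has exited, everything else is missing from the sample.
SAMPLE = "bgp\tUp 12 minutes\ndatabase\tUp 12 minutes\nswss\tExited (1) 2 minutes ago\n"
print(not_running(SAMPLE, expected={"bgp", "database", "swss"}))
```

An empty list means every expected container is up, which is the state shown in the output above.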

● hw-management.service - Thermal control and chassis management for Mellanox systems

Loaded: loaded (/lib/systemd/system/hw-management.service; disabled; vendor preset: enabled)

Active: active (exited) since Thu 2019-10-17 04:34:33 UTC; 16min ago

Main PID: 493 (code=exited, status=0/SUCCESS)

Tasks: 2 (limit: 4915)

Memory: 220.6M

CPU: 3.802s

CGroup: /system.slice/hw-management.service

├─ 1682 /bin/bash /usr/bin/hw-management-thermal-control.sh 4 4 0

└─17567 /bin/sleep 60

Last but not least, we check the HW details, which were partially covered by other commands:

TlvInfo Header:

Id String: TlvInfo

Version: 1

Total Length: 597

TLV Name Code Len Value

-------------------- ---- --- -----

Product Name 0x21 64 MSN2010

Part Number 0x22 20 MSN2010-CB2FC

Serial Number 0x23 24 MT1915X01130

Base MAC Address 0x24 6 B8:59:9F:62:46:80

Manufacture Date 0x25 19 05/06/2019 12:48:58

Device Version 0x26 1 16

MAC Addresses 0x2A 2 128

Manufacturer 0x2B 8 Mellanox

Vendor Extension 0xFD 36

Vendor Extension 0xFD 164

Vendor Extension 0xFD 36

Vendor Extension 0xFD 36

Vendor Extension 0xFD 36

Vendor Extension 0xFD 20

Platform Name 0x28 64 x86_64-mlnx_msn2010-r

ONIE Version 0x29 23 2019.02-5.2.0010-115200

CRC-32 0xFE 4 0xDAD3FE06

(checksum valid)

Once more we see the details about the platform: the product and part numbers, the base MAC address and some others. Among them, the Platform Name is very important, as we will use its value later in this article.

So far, the initial verification is done, and we can start configuring our Mellanox switch running MS Azure SONiC.

Configuration of Microsoft Azure SONiC

The major part of SONiC’s configuration is contained in a specific file: /etc/sonic/config_db.json. Therefore, the configuration process consists of modifying this file (as the changes there are persistent) and reapplying the information from this configuration file to the operational state.

You can find the details of what you can or cannot modify in the official documentation.

Let’s take a look at that configuration file:

{

"CRM": {

"Config": {

"acl_counter_high_threshold": "85",

"acl_counter_low_threshold": "70",

"acl_counter_threshold_type": "percentage",

"acl_entry_high_threshold": "85",

"acl_entry_low_threshold": "70",

"acl_entry_threshold_type": "percentage",

"acl_group_high_threshold": "85",

"acl_group_low_threshold": "70",

"acl_group_threshold_type": "percentage",

"acl_table_high_threshold": "85",

"acl_table_low_threshold": "70",

"acl_table_threshold_type": "percentage",

"fdb_entry_high_threshold": "85",

"fdb_entry_low_threshold": "70",

"fdb_entry_threshold_type": "percentage",

"ipv4_neighbor_high_threshold": "85",

"ipv4_neighbor_low_threshold": "70",

"ipv4_neighbor_threshold_type": "percentage",

"ipv4_nexthop_high_threshold": "85",

"ipv4_nexthop_low_threshold": "70",

"ipv4_nexthop_threshold_type": "percentage",

"ipv4_route_high_threshold": "85",

"ipv4_route_low_threshold": "70",

"ipv4_route_threshold_type": "percentage",

"ipv6_neighbor_high_threshold": "85",

"ipv6_neighbor_low_threshold": "70",

"ipv6_neighbor_threshold_type": "percentage",

"ipv6_nexthop_high_threshold": "85",

"ipv6_nexthop_low_threshold": "70",

"ipv6_nexthop_threshold_type": "percentage",

"ipv6_route_high_threshold": "85",

"ipv6_route_low_threshold": "70",

"ipv6_route_threshold_type": "percentage",

"nexthop_group_high_threshold": "85",

"nexthop_group_low_threshold": "70",

"nexthop_group_member_high_threshold": "85",

"nexthop_group_member_low_threshold": "70",

"nexthop_group_member_threshold_type": "percentage",

"nexthop_group_threshold_type": "percentage",

"polling_interval": "300"

}

},

"DEVICE_METADATA": {

"localhost": {

"default_bgp_status": "up",

"default_pfcwd_status": "disable",

"hostname": "mlx-sonic",

"hwsku": "ACS-MSN2010",

"mac": "b8:59:9f:62:46:80",

"platform": "x86_64-mlnx_msn2010-r0",

"type": "LeafRouter"

}

}

}

That is one of the beauties of Microsoft Azure SONiC: almost the whole configuration is a single JSON file, which can be easily templated using Ansible or Python. You are unlikely to change the parameters of the CRM part, but you will change the information in DEVICE_METADATA when configuring the device.

The configuration tasks for this article are the following:
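As a small illustration of the templating idea, the following Python sketch updates a field in DEVICE_METADATA and serialises the result back to JSON. It works on a trimmed stand-in for the file rather than on /etc/sonic/config_db.json itself; the `with_metadata` helper and the new hostname `mlx-sonic-2` are made up for the example.

```python
import copy
import json

# Trimmed stand-in for config_db.json (only some of the keys shown above).
BASE_CONFIG = {
    "DEVICE_METADATA": {
        "localhost": {
            "hostname": "mlx-sonic",
            "hwsku": "ACS-MSN2010",
            "platform": "x86_64-mlnx_msn2010-r0",
            "type": "LeafRouter",
        }
    }
}


def with_metadata(config, **changes):
    """Return a copy of config with DEVICE_METADATA/localhost fields updated."""
    updated = copy.deepcopy(config)  # never mutate the template itself
    updated["DEVICE_METADATA"]["localhost"].update(changes)
    return updated


new_config = with_metadata(BASE_CONFIG, hostname="mlx-sonic-2")
rendered = json.dumps(new_config, indent=4, sort_keys=True)
print(rendered)
```

Working on a deep copy keeps the base template reusable for the next device.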

- Configuration of the ports (physical instances).

- Configuration of the IP addresses associated with the ports (logical instances).

- Configuration of the external BGP for the data centre fabric.

Let’s kick off with the first task.

#1. Ports

At first glance, you might think: “what is the point of configuring the ports?” After all, this is a sort of Linux, and we have covered that previously. Well, the reality is way more complex.

The reason for that is the abstraction of the interface names in SONiC. The names depend on the platform and the interface speed. Previously we pointed out that we need to know the name of our hardware platform, which is x86_64-mlnx_msn2010-r0 for our Mellanox SN 2010 switch. Knowing the name of the platform, we can find the relevant port mapping for it:

# name lanes index

Ethernet0 0 0

Ethernet4 4 1

Ethernet8 8 2

Ethernet12 12 3

Ethernet16 16 4

Ethernet20 20 5

Ethernet24 24 6

Ethernet28 28 7

Ethernet32 32 8

Ethernet36 36 9

Ethernet40 40 10

Ethernet44 44 11

Ethernet48 48 12

Ethernet52 52 13

Ethernet56 56 14

Ethernet60 60 15

Ethernet64 64 16

Ethernet68 68 17

Ethernet72 72,73,74,75 18

Ethernet76 76,77,78,79 19

Ethernet80 80,81,82,83 20

Ethernet84 84,85,86,87 21

Each device has its own port mapping, so check that in the corresponding folder.

The index column helps you find the proper interface name for your port. As we plan to use physical ports 1 and 7 per the physical topology above, we need to find the corresponding indexes, which map to the interface names. The management interface (eth0) is not reflected here, so index 0 identifies the first data plane port (swp1). Therefore, the interfaces we need to configure are Ethernet0 (index 0) and Ethernet24 (index 6).

Lanes are the lanes of the ASIC connected to each port. Because this mapping is hardware-specific, SONiC is built for each platform separately.

Having figured out the names of the ports we are going to use, we can modify config_db.json accordingly:
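The lookup from a front-panel port number to a SONiC interface name can be sketched in a few lines of Python. The sample text is a shortened copy of the port mapping shown earlier; `parse_port_config` and `sonic_name` are illustrative helpers, and the sketch assumes the three-column layout of that listing.

```python
# Shortened copy of the port mapping listing (name, lanes, index).
PORT_CONFIG_SAMPLE = """\
# name lanes index
Ethernet0 0 0
Ethernet4 4 1
Ethernet24 24 6
Ethernet72 72,73,74,75 18
"""


def parse_port_config(text):
    """Map the zero-based index column to a (name, lanes) tuple."""
    ports = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and the header comment
        name, lanes, index = line.split()
        ports[int(index)] = (name, lanes)
    return ports


def sonic_name(ports, front_panel_port):
    """Front-panel ports are counted from 1, the index column from 0."""
    return ports[front_panel_port - 1][0]


ports = parse_port_config(PORT_CONFIG_SAMPLE)
print(sonic_name(ports, 1), sonic_name(ports, 7))
```

The off-by-one between the front-panel numbering and the index column is the only real logic here, which is exactly the step that is easy to get wrong by hand.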

{

! OUTPUT IS OMITTED

"PORT": {

"Ethernet0": {

"admin_status": "up",

"alias": "Ethernet1",

"lanes": "0",

"mtu": "9216",

"speed": "10000"

},

"Ethernet24": {

"admin_status": "up",

"alias": "Ethernet7",

"lanes": "24",

"mtu": "9216",

"speed": "10000"

}

}

}

We just add the new PORT container after DEVICE_METADATA, following the guidelines from the official documentation. As you see, we create a container for each interface we are going to use and add the parameters we are interested in. The lanes key is mandatory, and the rest are optional.

The config_db.json file stores the configuration, which is persistent across reboots. However, changes to this file aren’t applied automatically to the running system, so you need to force that using the following command:
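Because a hand-edited JSON file is easy to break, a quick sanity check before reloading can save a reboot cycle. The sketch below only verifies two things stated above: that the text parses as JSON and that every PORT entry carries the mandatory lanes key. `ports_missing_lanes` is a hypothetical helper, and the Ethernet28 entry is deliberately incomplete for the demonstration.

```python
import json


def ports_missing_lanes(config_text):
    """Return the names of PORT entries that lack the mandatory 'lanes' key.

    json.loads raises ValueError if the text is not valid JSON.
    """
    config = json.loads(config_text)
    return sorted(
        name
        for name, attrs in config.get("PORT", {}).items()
        if "lanes" not in attrs
    )


SAMPLE = json.dumps({
    "PORT": {
        "Ethernet24": {
            "admin_status": "up",
            "alias": "Ethernet7",
            "lanes": "24",
            "mtu": "9216",
            "speed": "10000",
        },
        # Hypothetical entry with lanes left out on purpose.
        "Ethernet28": {"admin_status": "up"},
    }
})
print(ports_missing_lanes(SAMPLE))
```

This is not a full schema validation, just a guard against the two most likely editing mistakes.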

Stopping service swss ...

Stopping service lldp ...

Stopping service bgp ...

Stopping service hostcfgd ...

Running command: /usr/local/bin/sonic-cfggen -j /etc/sonic/config_db.json --write-to-db

Resetting failed status for service bgp ...

Resetting failed status for service dhcp_relay ...

Resetting failed status for service hostcfgd ...

Resetting failed status for service hostname-config ...

Resetting failed status for service interfaces-config ...

Resetting failed status for service lldp ...

Resetting failed status for service ntp-config ...

Resetting failed status for service pmon ...

Resetting failed status for service radv ...

Resetting failed status for service rsyslog-config ...

Resetting failed status for service snmp ...

Resetting failed status for service swss ...

Resetting failed status for service syncd ...

Resetting failed status for service teamd ...

Restarting service hostname-config ...

Restarting service interfaces-config ...

Restarting service ntp-config ...

Restarting service rsyslog-config ...

Restarting service swss ...

Restarting service bgp ...

Restarting service lldp ...

Restarting service hostcfgd ...

Restarting service sflow ...

As you see from the log, all the Docker containers with the various services are restarted during the execution of this command.

Once the configuration is reapplied, you may find the added interfaces using the standard Linux commands:

! SOME TEXT IS TRUNCATED

107: Ethernet0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9216 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether b8:59:9f:62:46:80 brd ff:ff:ff:ff:ff:ff

108: Ethernet24: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9216 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether b8:59:9f:62:46:80 brd ff:ff:ff:ff:ff:ff

Hurray! The first task is solved, so we are heading to the next one.

#2. IP addresses

We’ll split the configuration of the IP addresses into two parts:

- Configuration of the IP addresses on the Loopback interface

- Configuration of the IP addresses on the data plane interfaces

To configure IP addresses on the loopback interface, we need to add a new container called LOOPBACK_INTERFACE to config_db.json:

{

! OUTPUT is TRUNCATED

"LOOPBACK_INTERFACE": {

"Loopback0|10.0.0.2/32": {},

"Loopback0|fc00::10:0:0:2/128": {}

}

}

admin@mlx-cl:~$ sudo config reload -y

If you want the changed configuration to be applied in Microsoft Azure SONiC, don’t forget to run “sudo config reload -y” after the change of the config_db.json file.

If you are familiar with JSON syntax, you can see that we create a separate container for each IP address, even though the interface is the same. The name of each container consists of both the interface’s name and its IP address, separated by a pipe character.
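
To make the key structure more tangible, here is a minimal Python sketch that splits such keys into the interface name and the IP prefix. The helper name split_key is hypothetical (it is not part of SONiC), and only the standard library is used:

```python
import ipaddress

# Table copied from the config_db.json snippet above.
LOOPBACK_INTERFACE = {
    "Loopback0|10.0.0.2/32": {},
    "Loopback0|fc00::10:0:0:2/128": {},
}

def split_key(key):
    """Split 'name|prefix' into the interface name and a parsed IP interface."""
    name, prefix = key.split("|", 1)
    return name, ipaddress.ip_interface(prefix)

for key in LOOPBACK_INTERFACE:
    name, addr = split_key(key)
    print(f"{name}: IPv{addr.version} {addr.with_prefixlen}")
```

Parsing the prefix with ipaddress also catches a mistyped address before the file ever reaches the switch.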

If you aren’t familiar with JSON, join our network automation training.

Once the configuration is reapplied, we can visually check the changes in the list of the interfaces’ IP addresses:

133: Loopback0: <BROADCAST,NOARP,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether f2:0d:99:d2:57:35 brd ff:ff:ff:ff:ff:ff

inet 10.0.255.11/32 scope global Loopback0

valid_lft forever preferred_lft forever

inet 10.0.0.2/32 scope global Loopback0

valid_lft forever preferred_lft forever

inet6 fc00::10:0:0:2/128 scope global

valid_lft forever preferred_lft forever

inet6 fc00::10:0:255:11/128 scope global

valid_lft forever preferred_lft forever

inet6 fe80::f00d:99ff:fed2:5735/64 scope link

valid_lft forever preferred_lft forever

And we can verify their reachability:

PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.

64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=0.060 ms

64 bytes from 10.0.0.2: icmp_seq=2 ttl=64 time=0.066 ms

64 bytes from 10.0.0.2: icmp_seq=3 ttl=64 time=0.063 ms

--- 10.0.0.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2027ms

rtt min/avg/max/mdev = 0.060/0.063/0.066/0.002 ms

admin@mlx-sonic:~$ ping6 fc00::10:0:0:2 -c 3

PING fc00::10:0:0:2(fc00::10:0:0:2) 56 data bytes

64 bytes from fc00::10:0:0:2: icmp_seq=1 ttl=64 time=0.086 ms

64 bytes from fc00::10:0:0:2: icmp_seq=2 ttl=64 time=0.109 ms

64 bytes from fc00::10:0:0:2: icmp_seq=3 ttl=64 time=0.110 ms

--- fc00::10:0:0:2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2045ms

rtt min/avg/max/mdev = 0.086/0.101/0.110/0.016 ms

The next stop in our journey is the configuration of the IP addresses on the physical interfaces, which we created in the previous section. This is achieved in the same way as we’ve just done for the Loopbacks, but using another key, INTERFACE:

{

! OUTPUT is TRUNCATED

"INTERFACE": {

"Ethernet0|169.254.0.1/31": {},

"Ethernet24|169.254.0.2/31": {}

}

}

admin@mlx-sonic:~$ sudo config reload -y

Germans say “Reboot tut gut”, so run “sudo config reload -y” after the change of the config_db.json file.
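
Editing the file by hand makes it easy to leave a stray comma or a mistyped address behind. As a hedged alternative, the following Python sketch builds the same two tables in memory and serialises them with the standard json module. The helper add_ip is hypothetical, and the empty dictionary stands in for the parsed contents of config_db.json:

```python
import ipaddress
import json

def add_ip(config, table, ifname, prefix):
    """Add an 'ifname|prefix' key to the given table, validating the prefix first."""
    ipaddress.ip_interface(prefix)  # raises ValueError on a malformed address
    config.setdefault(table, {})[f"{ifname}|{prefix}"] = {}
    return config

# Stand-in for the parsed contents of config_db.json (all other tables omitted).
config = {}
add_ip(config, "LOOPBACK_INTERFACE", "Loopback0", "10.0.0.2/32")
add_ip(config, "LOOPBACK_INTERFACE", "Loopback0", "fc00::10:0:0:2/128")
add_ip(config, "INTERFACE", "Ethernet0", "169.254.0.1/31")
add_ip(config, "INTERFACE", "Ethernet24", "169.254.0.2/31")

# json.dumps always emits syntactically valid JSON.
print(json.dumps(config, indent=4))
```

Writing the result back to the file and running “sudo config reload -y” remain separate steps, exactly as described above.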

In reality, we could have merged the configuration of the IP addresses for the loopbacks and the physical interfaces into a single step. However, as they use different keys, I decided to split them. After the configuration reload, we can see that the interfaces created earlier now have their IP addresses:

189: Ethernet0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9216 qdisc pfifo_fast state UP group default qlen 1000

link/ether b8:59:9f:62:46:80 brd ff:ff:ff:ff:ff:ff

inet 169.254.0.1/31 scope global Ethernet0

valid_lft forever preferred_lft forever

inet6 fe80::ba59:9fff:fe62:4680/64 scope link

valid_lft forever preferred_lft forever

admin@mlx-sonic:~$ ip addr show Ethernet24

190: Ethernet24: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9216 qdisc pfifo_fast state UP group default qlen 1000

link/ether b8:59:9f:62:46:80 brd ff:ff:ff:ff:ff:ff

inet 169.254.0.2/31 scope global Ethernet24

valid_lft forever preferred_lft forever

inet6 fe80::ba59:9fff:fe62:4680/64 scope link

valid_lft forever preferred_lft forever

An interesting observation I made with SONiC is that the interface index (the number at the start of each entry in the ip output) changes after a config reload. E.g. Ethernet24 initially had index 108, and it is 190 after a couple of reloads.
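
Since this number is not stable across reloads, a script is safer looking interfaces up by name each time it needs the number. A minimal sketch, assuming it runs on the Linux host itself; the wrapper current_ifindex is a hypothetical name, while socket.if_nametoindex is part of the Python standard library:

```python
import socket

def current_ifindex(name):
    """Return the kernel's current index for an interface, or None if it is absent."""
    try:
        return socket.if_nametoindex(name)
    except OSError:
        return None

for name in ("Ethernet0", "Ethernet24"):
    print(name, current_ifindex(name))
```

On a machine without these interfaces the sketch simply prints None for both names.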

The next moment is a moment of truth for our Mellanox SN 2010 switch running Microsoft Azure SONiC in this setup, as we try to reach our supporting network functions based on Nokia SR OS and Cisco IOS XR operating systems per our logical topology above:

PING 169.254.0.0 (169.254.0.0) 56(84) bytes of data.

64 bytes from 169.254.0.0: icmp_seq=1 ttl=64 time=2.86 ms

--- 169.254.0.0 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 2.869/2.869/2.869/0.000 ms

admin@mlx-sonic:~$ ping 169.254.0.1 -c 1

PING 169.254.0.1 (169.254.0.1) 56(84) bytes of data.

64 bytes from 169.254.0.1: icmp_seq=1 ttl=64 time=0.071 ms

--- 169.254.0.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.071/0.071/0.071/0.000 ms

admin@mlx-sonic:~$ ping 169.254.0.2 -c 1

PING 169.254.0.2 (169.254.0.2) 56(84) bytes of data.

64 bytes from 169.254.0.2: icmp_seq=1 ttl=64 time=0.067 ms

--- 169.254.0.2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.067/0.067/0.067/0.000 ms

admin@mlx-sonic:~$ ping 169.254.0.3 -c 1

PING 169.254.0.3 (169.254.0.3) 56(84) bytes of data.

64 bytes from 169.254.0.3: icmp_seq=1 ttl=255 time=4.93 ms

--- 169.254.0.3 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 4.937/4.937/4.937/0.000 ms

The per-link reachability is achieved, so we are heading to the BGP section to achieve end-to-end reachability.
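
The addresses pinged above follow from the /31 addressing: each point-to-point subnet holds exactly two addresses, so the far end is always the other one. A small Python sketch of that arithmetic, where p2p_peer is a hypothetical helper name:

```python
import ipaddress

def p2p_peer(local):
    """Return the other address of an IPv4 /31 point-to-point subnet."""
    iface = ipaddress.ip_interface(local)
    if iface.version != 4 or iface.network.prefixlen != 31:
        raise ValueError("expected an IPv4 /31 interface address")
    # Iterating a /31 network yields exactly its two addresses.
    return next(a for a in iface.network if a != iface.ip)

for local in ("169.254.0.1/31", "169.254.0.2/31"):
    print(local, "->", p2p_peer(local))
```

For the two addresses configured on the switch, this yields 169.254.0.0 and 169.254.0.3 as the remote ends.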

#3. BGP

The third step in our Microsoft Azure SONiC configuration journey is BGP, which we use to build the fabric. We’ll try to build the simplest form of BGP, which is IPv4 unicast. Despite being the simplest, it is one of the most widely used forms for building data centre fabrics.

To configure BGP, I tried to follow the official configuration example and created the following config:

{

! OUTPUT is TRUNCATED

"DEVICE_METADATA": {

"localhost": {

"default_bgp_status": "up",

"bgp_asn": 65002,

! OUTPUT is TRUNCATED

"BGP_NEIGHBOR": {

"169.254.0.0": {

"local_addr": "169.254.0.1",

"asn": 65001,

"name": "SR1"

},

"169.254.0.3": {

"local_addr": "169.254.0.2",

"asn": 65003,

"name": "XR1"

}

}

}

admin@mlx-sonic:~$ sudo config reload -y

But after reapplying the configuration, I see an unexpected output:

% No BGP neighbors found

In the documentation I’ve read that Quagga was previously used as the routing daemon, but recently (starting from SONiC 4.* version) it was replaced by FRR. Despite being a fork of Quagga, FRR is a separate product with a separate daemon. So I suspect, though I may be mistaken, that the BGP configuration in config_db.json does not work because of this change.

As we have FRR, we can take a look at its configuration using the vtysh tool:

mlx-sonic# show run

Building configuration...

Current configuration:

!

frr version 7.2-sonic

frr defaults traditional

hostname mlx-sonic

log syslog informational

log facility local4

agentx

no service integrated-vtysh-config

!

enable password zebra

password zebra

!

router bgp 65002

bgp router-id 10.0.0.2

bgp log-neighbor-changes

no bgp default ipv4-unicast

bgp graceful-restart restart-time 240

bgp graceful-restart

bgp bestpath as-path multipath-relax

neighbor PEER_V4 peer-group

neighbor PEER_V6 peer-group

!

address-family ipv4 unicast

network 10.0.0.2/32

neighbor PEER_V4 soft-reconfiguration inbound

neighbor PEER_V4 route-map TO_BGP_PEER_V4 out

maximum-paths 64

exit-address-family

!

address-family ipv6 unicast

network fc00::/64

neighbor PEER_V6 soft-reconfiguration inbound

neighbor PEER_V6 route-map TO_BGP_PEER_V6 out

maximum-paths 64

exit-address-family

!

ip prefix-list PL_LoopbackV4 seq 5 permit 10.0.0.2/32

!

ipv6 prefix-list PL_LoopbackV6 seq 5 permit fc00::/64

!

route-map FROM_BGP_SPEAKER_V4 permit 10

!

route-map ISOLATE permit 10

set as-path prepend 65002

!

route-map RM_SET_SRC permit 10

set src 10.0.0.2

!

route-map RM_SET_SRC6 permit 10

set src fc00::10:0:0:2

!

route-map TO_BGP_PEER_V4 permit 100

!

route-map TO_BGP_PEER_V6 permit 100

!

route-map TO_BGP_SPEAKER_V4 deny 10

!

route-map set-next-hop-global-v6 permit 10

set ipv6 next-hop prefer-global

!

ip protocol bgp route-map RM_SET_SRC

!

ipv6 protocol bgp route-map RM_SET_SRC6

!

line vty

!

end

The configuration seems correct, as even the proper IPv4 and IPv6 addresses of the loopbacks are going to be advertised. However, one thing is missing: the BGP peers. Peer groups are created for both IPv4 and IPv6, but they have no peers inside. That is the root cause of our failed BGP sessions.
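
This kind of mismatch can also be spotted mechanically. The Python sketch below compares the neighbours from the BGP_NEIGHBOR table with the neighbours that have a remote-as statement in the FRR configuration text. The function name configured_peers is hypothetical, and the running configuration is pasted in as a string rather than fetched from the device:

```python
import re

# Neighbour addresses from the BGP_NEIGHBOR table shown earlier.
BGP_NEIGHBOR = {
    "169.254.0.0": {"local_addr": "169.254.0.1", "asn": 65001, "name": "SR1"},
    "169.254.0.3": {"local_addr": "169.254.0.2", "asn": 65003, "name": "XR1"},
}

# A few lines excerpted from the "show run" output above.
RUNNING_CONFIG = """\
router bgp 65002
 neighbor PEER_V4 peer-group
 neighbor PEER_V6 peer-group
"""

def configured_peers(running_config):
    """Collect neighbours that have a 'remote-as' statement in the FRR config."""
    pattern = re.compile(r"^\s*neighbor\s+(\S+)\s+remote-as\s+\d+", re.MULTILINE)
    return set(pattern.findall(running_config))

missing = sorted(set(BGP_NEIGHBOR) - configured_peers(RUNNING_CONFIG))
print("Missing in FRR:", missing)
```

With the excerpt above, both neighbours are reported as missing, which matches what we see on the switch.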

The solution is to add the BGP peers to the groups and to activate the groups:

configure terminal

!

router bgp 65002

neighbor 169.254.0.0 remote-as 65001

neighbor 169.254.0.0 peer-group PEER_V4

neighbor 169.254.0.3 remote-as 65003

neighbor 169.254.0.3 peer-group PEER_V4

!

address-family ipv4 unicast

neighbor PEER_V4 activate

exit-address-family

!

address-family ipv6 unicast

neighbor PEER_V6 activate

exit-address-family

!

end

mlx-sonic# copy running-config startup-config

Note: this version of vtysh never writes vtysh.conf

Building Configuration...

Configuration saved to /etc/frr/zebra.conf

Configuration saved to /etc/frr/bgpd.conf

Configuration saved to /etc/frr/staticd.conf

mlx-sonic# exit

admin@mlx-sonic:~$
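
Typing these lines by hand does not scale beyond a couple of peers. As a rough sketch, the IPv4 part of the commands above can be rendered from the same data that sits in the BGP_NEIGHBOR table. The function frr_bgp_commands is a hypothetical helper that only produces text; pasting the result into vtysh is still left to the operator:

```python
# Neighbour data copied from the BGP_NEIGHBOR table in config_db.json above.
BGP_NEIGHBOR = {
    "169.254.0.0": {"local_addr": "169.254.0.1", "asn": 65001, "name": "SR1"},
    "169.254.0.3": {"local_addr": "169.254.0.2", "asn": 65003, "name": "XR1"},
}

def frr_bgp_commands(local_asn, neighbors, group="PEER_V4"):
    """Render vtysh-style lines that attach IPv4 neighbours to a peer group."""
    lines = ["configure terminal", f"router bgp {local_asn}"]
    for address, attrs in neighbors.items():
        lines.append(f" neighbor {address} remote-as {attrs['asn']}")
        lines.append(f" neighbor {address} peer-group {group}")
    lines += [
        " address-family ipv4 unicast",
        f"  neighbor {group} activate",
        " exit-address-family",
        "end",
    ]
    return lines

commands = frr_bgp_commands(65002, BGP_NEIGHBOR)
print("\n".join(commands))
```

The IPv6 peer group is left out of the sketch on purpose, as there are no IPv6 neighbours in this setup.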

When this configuration is done, we see that our BGP sessions are coming up:

IPv4 Unicast Summary:

BGP router identifier 10.0.0.2, local AS number 65002 vrf-id 0

BGP table version 3

RIB entries 5, using 920 bytes of memory

Peers 2, using 41 KiB of memory

Peer groups 2, using 128 bytes of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd

169.254.0.0 4 65001 7 5 0 0 0 00:00:29 1

169.254.0.3 4 65003 4 5 0 0 0 00:00:29 1

Total number of neighbors 2
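
In this summary the last column shows a number of received prefixes once a session is established, and a state name otherwise. A hedged Python sketch of checking that automatically, with the neighbour rows pasted in as text and session_states as a hypothetical helper name:

```python
# Neighbour rows copied from the summary output above.
SUMMARY = """\
Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd
169.254.0.0 4 65001 7 5 0 0 0 00:00:29 1
169.254.0.3 4 65003 4 5 0 0 0 00:00:29 1
"""

def session_states(summary):
    """Map each neighbour to True when its last column is a prefix count."""
    states = {}
    lines = summary.splitlines()
    # Rows start right after the header line beginning with 'Neighbor'.
    start = next(i for i, line in enumerate(lines) if line.startswith("Neighbor")) + 1
    for line in lines[start:]:
        fields = line.split()
        if len(fields) < 10:
            break  # blank line or a trailer such as 'Total number of neighbors'
        states[fields[0]] = fields[-1].isdigit()
    return states

print(session_states(SUMMARY))
```

The column layout is taken from the output shown here, so other FRR versions may need a different parser.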

And after the convergence is achieved, we can also see all the routes announced by the Mellanox SN 2010 running Microsoft Azure SONiC and by the supporting network functions running Cisco IOS XR and Nokia SR OS:

BGP table version is 3, local router ID is 10.0.0.2, vrf id 0

Default local pref 100, local AS 65002

Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

i internal, r RIB-failure, S Stale, R Removed

Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

*> 10.0.0.1/32 169.254.0.0 0 65001 i

*> 10.0.0.2/32 0.0.0.0 0 32768 i

*> 10.0.0.3/32 169.254.0.3 0 0 65003 i

Displayed 3 routes and 3 total paths

Now the topology looks fine and we can verify the end-to-end reachability. We do it by pinging the Nokia VSR and the Mellanox/SONiC device from Cisco IOS XRv:

Sun Dec 15 00:51:57.090 UTC

Type escape sequence to abort.

Sending 5, 100-byte ICMP Echos to 10.0.0.1, timeout is 2 seconds:

!!!!!

Success rate is 100 percent (5/5), round-trip min/avg/max = 1/2/9 ms

RP/0/0/CPU0:XR1#ping 10.0.0.2 so 10.0.0.3

Sun Dec 15 00:52:00.030 UTC

Type escape sequence to abort.

Sending 5, 100-byte ICMP Echos to 10.0.0.2, timeout is 2 seconds:

!!!!!

Success rate is 100 percent (5/5), round-trip min/avg/max = 1/1/1 ms

The basic configuration scenario of the Microsoft Azure SONiC on the Mellanox SN 2010 switch is done, as well as the integration with Cisco IOS XR and Nokia SR OS for interoperability.

You can find the topology and the relevant files on my GitHub page.

Lessons learned

Although it took me quite a while to configure SONiC, the experience I gained was outstanding. The fact that the whole configuration can be represented as a single JSON file makes the device under SONiC control a real network function and a part of the application, as you describe it in the same way as the configuration file of your application.

The second lesson learned is that even the official documentation might not (yet) be accurate, so you can spend a lot of time figuring out how to fix things that don’t work. SONiC is not yet that popular, so there is a huge lack of examples, which I’m planning to fix.

Conclusion

Microsoft Azure SONiC is a very different network operating system compared to many things we have covered before. It is developed in a really interesting way, and its area of applicability might be broader than only the hyperscalers. It merges networking, server management and containers. And I think this is the proper way of developing Open Networking. In the next year we’ll talk more about that.

I wish you a marvellous Christmas with your family, friends and loved ones! And all the best in the New Year, friends! Take care and goodbye!

P.S.

If you have further questions or you need help with your networks, I’m happy to assist you, just send me a message. Also don’t forget to share the article on your social media if you like it.

BR,

Anton Karneliuk

JUNIPER cRPD with Sonic would be a great post.

Hey Tarcisio,

we’ll take a look in future. We haven’t written anything about Juniper (mainly due to the absence of available VMs), but we might change that in future.

Cheers,

Anton

Hi Anton,

Nice post.

BTW, do you have any idea about SR-TE and RSVP-TE interoperation?

Thanks in advance