Hello my friend,

It has been a while since we posted our last blogpost, which touched on the infrastructure aspects of building Multi Server Cloud with ProxMox. After the summer break we are continuing our blogging and development activities at Karneliuk.com. Today we’ll show some of the backstage of the software development of pygnmi.


retrieval system, or transmitted in any form or by any means, electronic, mechanical or photocopying, recording, or otherwise, for commercial purposes without the prior permission of the author.

What is Automation? Network Automation?

In a nutshell, Automation and Network Automation are just a subset of tasks from a broader topic called Software Development. With the world moving towards Industry 4.0, the growth of the digital economy and other trends, all sorts of applications are becoming more widely used. To create history rather than remain a part of the past, you should know the principles of software development and be able to create applications yourself. Sounds complicated? It may be, indeed. However, with our Network Automation Training you will definitely have a breakthrough in the software development world.

At our trainings, advanced network automation and automation with Nornir (the second step after advanced network automation), we give you detailed knowledge of all the relevant technologies:

- Success and failure strategies to build the automation tools.

- Principles of software development and the tools for it.

- Data encoding (free-text, XML, JSON, YAML, Protobuf)

- Model-driven network automation with YANG, NETCONF, RESTCONF, GNMI.

- Full configuration templating with Jinja2 based on the source of truth (NetBox).

- Best programming languages (Python, Bash) for developing automation, configuration management tools (Ansible) and automation frameworks (Nornir).

- Network automation infrastructure (Linux, Linux networking, KVM, Docker).

Great class. Anton is top notch. Looking forward to more offerings from Karneliuk.com

Rasheim Myers @ Cisco Systems

Moreover, we put all the mentioned technologies in the context of real use cases, which our team has solved and is solving in various projects in service provider, enterprise and data centre networks and systems across Europe and the USA. That gives you the opportunity to ask questions, understand the solutions in depth and discuss your own projects. On top of that, each technology comes with online demos, and you do the lab afterwards to master your skills. Such a mixture creates a unique learning environment, which all students value so much. Join us and unleash your potential.

Brief Description

Some time ago we shared some ideas about automated network testing with the pygnmi and pytest libraries. In that blogpost we explained that we use pytest for two purposes:

- For the automated testing of the network configuration.

- For the automated testing of parts of our software (e.g., pygnmi itself) while we develop it.

We covered the first use case in the aforementioned blogpost, whereas today we’ll take a closer look at the second one.

Refer to the original blogpost for further details on network configuration testing.

Now, you may wonder what the advantage of automated software testing with separate tools is, if we can test manually ourselves. There are two good answers to this question:

- Speed. You get the results of your tests almost instantly (in a matter of seconds) rather than after the hours it takes to analyse the outcome of tests manually.

- Consistency. Once you define the test cases, you no longer have to worry about forgetting to test something. From time to time you just need to validate that your test cases are still accurate.

From the perspective of our project, pygnmi, automated tests have another important benefit. We are quite proud that not only Team Karneliuk.com contributes to its development, but also a number of other contributors worldwide, which makes our project truly open source. That enables much quicker development of pygnmi compared to what it would have been if only we were developing it. On the other hand, being ultimately responsible for the stability of pygnmi, we are genuinely interested in each release being thoroughly tested. As such, we can focus on understanding the code created by contributors and developing unit tests for it, to make sure that new features and improvements of existing ones work properly.

Okay, by this point you may be thinking that automated tests for software development are a cool thing. However, what is the coverage of tests? Coverage is a metric showing how extensively we have tested our software. In essence, it shows the percentage of the lines of code from the target module or modules that are executed by your unit tests. The higher this percentage, the better, as it ultimately means that your module is tested more in depth. Sounds good, doesn’t it?

Is there also a possibility to analyse the coverage of unit tests with pytest in an automated way? Yes, it is possible. To do that, we can use another Python library called coverage.py. This library allows you to:

- collect results of the pytest execution;

- analyse which lines of code from a target module or modules were executed during the pytest run;

- produce a report showing the coverage as a percentage, as well as which lines were not executed.

The outcome of the report can act as a guide when you develop unit tests, to make sure you test everything (if you want to achieve 100% coverage). Let’s see how it works.
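Before turning to the tool itself, the idea of line coverage can be illustrated with a few lines of standard-library Python. The sketch below is not how coverage.py is implemented; it only assumes that recording executed lines with sys.settrace is a fair approximation of the concept. The function classify, the helper names and the BODY_LINES set are all made up for this illustration.

```python
import sys


def classify(n):
    if n < 0:
        return "negative"
    if n == 0:
        return "zero"
    return "positive"


# Offsets (relative to the "def" line) of the five statements inside classify().
# Hard-coded here for the illustration; a real tool derives them from the source.
BODY_LINES = {1, 2, 3, 4, 5}


def run_with_line_trace(func, *args):
    """Run func(*args); return (result, set of executed line offsets inside func)."""
    code = func.__code__
    executed = set()

    def tracer(frame, event, arg):
        # Only follow frames that execute the function under test.
        if frame.f_code is code:
            if event == "line":
                executed.add(frame.f_lineno - code.co_firstlineno)
            return tracer
        return None

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(previous)  # always restore the previous trace function
    return result, executed


def percent_covered(executed):
    """Share of the function's statements that were executed, in percent."""
    return 100.0 * len(executed & BODY_LINES) / len(BODY_LINES)


_, lines_positive = run_with_line_trace(classify, 5)
_, lines_negative = run_with_line_trace(classify, -1)
print(percent_covered(lines_positive))
print(percent_covered(lines_positive | lines_negative))
```

A single "test" with a positive number leaves two of the return statements unexecuted; adding a second input raises the percentage, which is exactly the effect we are after when we add unit tests later in this blogpost.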

Lab Setup

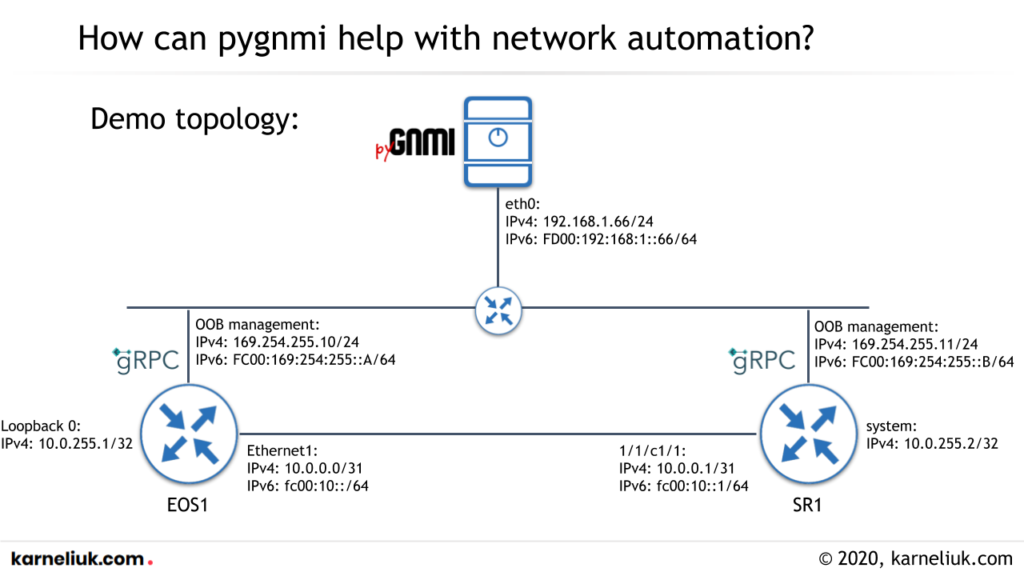

For this blogpost, as for other blogposts in pygnmi series, we are using the following topology:

At the moment, we are running automated tests only against Arista EOS due to the nature of our development lab setup. In the future we’ll extend the coverage to several major vendors.

At our Zero-To-Hero Network Automation Training you will learn how to build and automate your lab setup.

Coverage.py Usage

Coverage.py is a Python package created to calculate the percentage of a software module that is covered by unit tests. As such, it is used together with a unit test tool (e.g., pytest). Once in use, it can show you the effectiveness of your unit tests and give you clear guidance on which parts of the code were and were not tested.

Step #1. Basic Usage

It is important to emphasise that coverage.py replaces neither the unit testing package pytest nor the unit tests themselves. Therefore, it is important to create the unit tests with pytest first.

Read the details on how to create tests for pytest in one of the previous blogposts.

After the unit tests are created, it is very simple to start using the coverage.py package. First of all, you need to install it from PyPI, where it is published as coverage (pip install coverage).

Once installed, you can execute your pytest unit tests through this module with coverage run -m pytest:


=================================== test session starts ====================================

platform linux -- Python 3.9.2, pytest-6.2.4, py-1.10.0, pluggy-0.13.1

rootdir: /home/aaa/Documents/D/Dev/pygnmi

collected 9 items

tests/test_connect_methods.py .. [ 22%]

tests/test_functionality.py ....... [100%]

==================================== 9 passed in 3.77s =====================================

As you see, we tell coverage to run the pytest-based unit tests. The tests are conducted and all of them pass, which is good. However, at this stage you don’t see any sign of the test coverage percentage…

To see that value (or, to be precise, values), we need to generate a report. The previous execution of coverage run -m pytest created a new file, .coverage, in your folder. This is a binary file holding a lot of information, which is the input for report generation. To produce the report, use the coverage report command; its -m flag adds the Missing column you see below:


Name Stmts Miss Cover Missing

-------------------------------------------------------------------------------------------------------------------------

pygnmi/__init__.py 1 0 100%

pygnmi/client.py 589 288 51% 54-55, 57, 75-77, 81-88, 95-97, 109-111, 132-134, 139-141, 159, 161, 177-186, 225-232, 237-244, 248-249, 253-255, 260-261, 267-269, 274-276, 317-345, 353-362, 394-395, 402-408, 419-434, 445-460, 466-468, 473-475, 502, 512, 521-532, 538-547, 552, 557, 562, 566, 571-576, 585, 594, 598, 603, 617, 624, 628, 633, 639, 643, 648, 651, 658, 672, 675-682, 685-698, 704-706, 711-714, 717-720, 723-726, 766-782, 791-800, 803, 812-816, 819-828, 831, 834, 840, 852-855, 861, 869-870, 883-884, 887-891, 907, 919-920, 930-932, 942-955, 977, 981, 984, 989-1011, 1017-1025

pygnmi/path_generator.py 36 0 100%

pygnmi/spec/gnmi_ext_pb2.py 44 0 100%

pygnmi/spec/gnmi_pb2.py 251 0 100%

pygnmi/spec/gnmi_pb2_grpc.py 29 15 48% 52-54, 63-65, 73-75, 84-86, 90-114

tests/__init__.py 0 0 100%

tests/messages.py 4 0 100%

tests/test_address_types.py 3 0 100%

tests/test_connect_methods.py 31 0 100%

tests/test_functionality.py 190 0 100%

venv/lib/python3.9/site-packages/_pytest/__init__.py 5 2 60% 5-8

venv/lib/python3.9/site-packages/_pytest/_argcomplete.py 37 23 38% 77, 81-98, 102-109

venv/lib/python3.9/site-packages/_pytest/_code/__init__.py 10 0 100%

venv/lib/python3.9/site-packages/_pytest/_code/code.py 699 450 36% 48-51, 67, 78, 85, 90, 92-95, 100-101, 106, 115-120, 130, 134, 138, 142, 146, 151-153, 162-164, 168, 176-182, 195-197, 201, 204-205, 209, 213, 216, 221-223, 228, 233, 236, 242-260, 273-289, 292-302, 312, 324-335, 353-367, 371, 375, 378-381, 395, 399-403, 408-428, 467-475, 492-497, 502, 506-507, 512-515, 520-523, 528-531, 536-539, 544-546, 550, 553-555, 567-573, 582, 585-588, 629-648, 656-662, 684-695, 698-701, 704-709, 719-737, 745-754, 757-780, 787-815, 818-825, 828-848, 865-890, 895-939, 947-950, 953, 956, 967, 970, 973-975, 987-991, 994-999, 1008-1009, 1022-1036, 1041-1043, 1052, 1077-1105, 1108-1127, 1130, 1144-1149, 1157-1158, 1166-1181, 1196, 1200-1213, 1242-1259

venv/lib/python3.9/site-packages/_pytest/_code/source.py 142 104 27% 24-38, 41-43, 50, 54, 57-64, 67, 70, 74-81, 86-88, 93-94, 99-102, 106-108, 111, 120-126, 133-139, 143, 149-165, 174-212

venv/lib/python3.9/site-packages/_pytest/_io/__init__.py 3 0 100%

!

! A LOT OF OUTPUT IS TRUNCATED FOR BREVITY

!

venv/lib/python3.9/site-packages/py/_vendored_packages/__init__.py 0 0 100%

venv/lib/python3.9/site-packages/py/_vendored_packages/apipkg/__init__.py 152 55 64% 22, 30-36, 65-67, 74, 86-90, 128-135, 142-143, 149, 156-157, 166-175, 182-187, 192-195, 198-201, 204, 207

venv/lib/python3.9/site-packages/py/_vendored_packages/apipkg/version.py 1 0 100%

venv/lib/python3.9/site-packages/py/_version.py 1 0 100%

venv/lib/python3.9/site-packages/pytest/__init__.py 60 0 100%

venv/lib/python3.9/site-packages/pytest/__main__.py 3 0 100%

venv/lib/python3.9/site-packages/pytest/collect.py 21 5 76% 30, 33-36

venv/lib/python3.9/site-packages/six.py 504 264 48% 49-72, 77, 103-104, 117, 123-126, 136-138, 150, 159-162, 165-166, 190-192, 196, 200-203, 206-217, 226, 232-233, 237, 240, 324, 504, 512, 517-523, 535-541, 546-548, 554-556, 561, 566, 570-584, 599, 602, 608, 616-632, 644, 647, 661-663, 669-689, 695, 699, 703, 707, 714-737, 753-754, 759-811, 813-820, 830-850, 869-871, 882-895, 909-913, 928-936, 950-955, 966-973, 994-995

-------------------------------------------------------------------------------------------------------------------------

TOTAL 33079 18252 45%

The output is relatively long. Overall:

- You can see the coverage of executed code lines for each Python file.

- You can see the total code coverage.

The immediate thought you may have is that many unrelated Python files are being checked. How can we improve the signal-to-noise ratio?

Step #2. Limiting the Reported Scope

Coverage.py accepts extra arguments when you run unit tests. For example, you can specify only the files/folders you are interested in, which shall be tracked in terms of coverage. Or, the other way around, you can exclude certain files. However, first of all we need to delete the results of the previous coverage.py run, which is what the coverage erase command does.

Once you are ready for the new run, let’s add some more arguments. In our case, we use the --include key to provide the names of the directories and/or specific files, and the --omit key to exclude files/folders correspondingly:


=================================== test session starts ====================================

platform linux -- Python 3.9.2, pytest-6.2.4, py-1.10.0, pluggy-0.13.1

rootdir: /home/aaa/Documents/D/Dev/pygnmi

collected 9 items

tests/test_connect_methods.py .. [ 22%]

tests/test_functionality.py ....... [100%]

==================================== 9 passed in 3.53s =====================================

What we have done is:

- we added the --include key, which points to the pygnmi folder containing the pygnmi module;

- we added the --omit key, which excludes the spec subdirectory from the pygnmi directory. The reason is that spec contains the original OpenConfig gNMI specification modules, and we are not testing those original modules.
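The effect of the two keys can be sketched in plain Python. The exact patterns passed on the command line are not reproduced in this post, so the include and omit values below are assumptions, and the helper is_measured is hypothetical. Coverage.py applies its own matching rules; the standard-library fnmatch is used here only to illustrate the idea of "included and not omitted".

```python
from fnmatch import fnmatch


def is_measured(path, include, omit):
    """True if path matches at least one include pattern and no omit pattern."""
    included = any(fnmatch(path, pattern) for pattern in include)
    omitted = any(fnmatch(path, pattern) for pattern in omit)
    return included and not omitted


# A few file names taken from the long report above.
files = [
    "pygnmi/__init__.py",
    "pygnmi/client.py",
    "pygnmi/path_generator.py",
    "pygnmi/spec/gnmi_pb2.py",
    "tests/test_functionality.py",
    "venv/lib/python3.9/site-packages/six.py",
]

include = ["pygnmi/*"]     # assumed pattern, for illustration only
omit = ["pygnmi/spec/*"]   # assumed pattern, for illustration only

measured = [name for name in files if is_measured(name, include, omit)]
print(measured)
```

With these assumed patterns only the three modules under pygnmi outside of spec remain in scope.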

Let’s validate the new report:


Name Stmts Miss Cover Missing

--------------------------------------------------------

pygnmi/__init__.py 1 0 100%

pygnmi/client.py 589 288 51% 54-55, 57, 75-77, 81-88, 95-97, 109-111, 132-134, 139-141, 159, 161, 177-186, 225-232, 237-244, 248-249, 253-255, 260-261, 267-269, 274-276, 317-345, 353-362, 394-395, 402-408, 419-434, 445-460, 466-468, 473-475, 502, 512, 521-532, 538-547, 552, 557, 562, 566, 571-576, 585, 594, 598, 603, 617, 624, 628, 633, 639, 643, 648, 651, 658, 672, 675-682, 685-698, 704-706, 711-714, 717-720, 723-726, 766-782, 791-800, 803, 812-816, 819-828, 831, 834, 840, 852-855, 861, 869-870, 883-884, 887-891, 907, 919-920, 930-932, 942-955, 977, 981, 984, 989-1011, 1017-1025

pygnmi/path_generator.py 36 0 100%

--------------------------------------------------------

TOTAL 626 288 54%

Now we see only Python modules we have created ourselves, which are the heart of pygnmi. Some of them have 100% coverage by our unit tests with pytest, whilst the main client is just 51%. How can we improve that number?
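Before improving the number, it helps to see how the figures in the report relate to each other. The short sketch below recomputes the Cover column from Stmts and Miss, using the values from the report above; the function name coverage_percent is our own. It also shows that the TOTAL row is weighted by the number of statements rather than being a plain mean of the per-file percentages.

```python
def coverage_percent(statements, missed):
    """Percentage of statements that were executed at least once."""
    if statements == 0:
        return 100.0  # an empty file is reported as fully covered
    return 100.0 * (statements - missed) / statements


# (Stmts, Miss) per file, copied from the report above.
rows = {
    "pygnmi/__init__.py": (1, 0),
    "pygnmi/client.py": (589, 288),
    "pygnmi/path_generator.py": (36, 0),
}

for name, (statements, missed) in rows.items():
    print(f"{name}: {coverage_percent(statements, missed):.0f}%")

total_statements = sum(statements for statements, _ in rows.values())
total_missed = sum(missed for _, missed in rows.values())
print(f"TOTAL: {coverage_percent(total_statements, total_missed):.0f}%")
```

Because pygnmi/client.py holds 589 of the 626 statements, the total is dominated by that one file.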

Step #3. Increasing Coverage

Let’s carefully analyse the output above. The Missing column lists the lines of code that were not executed. The more unexecuted lines you have, the lower your coverage is. How can you increase the amount of code executed? The answer is straightforward:

- Either you add more logic to your existing unit tests

- Or you create new tests to cover those missing lines

We will show the second approach here. Let’s take a look at the missing lines 54-55 and 57 from pygnmi/client.py:


54          self.__target = target
55          self.__target_path = target[0]
56      elif re.match('.*:.*', target[0]):
57          self.__target = (f'[{target[0]}]', target[1])

Those lines handle the different ways the connectivity data can be provided (IPv4/IPv6 address or FQDN, plus port). They are not executed because our tests provide the connectivity data as an FQDN, so the parts for a UNIX socket or IPv6 aren’t used. Let’s fix the IPv6 part.

In our .env file, which stores the connectivity data, we add new variables. Current state:
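To see why an IPv6 target is needed to reach line 57, here is a minimal standalone sketch that mirrors only the branch visible on lines 56-57. The function name normalise_target and the port value are hypothetical, and the earlier branch (lines 54-55) is left out because its condition is not shown in the excerpt.

```python
import re


def normalise_target(target):
    """Wrap the host in square brackets if it contains a colon (as on lines 56-57)."""
    host, port = target
    if re.match('.*:.*', host):
        # An IPv6 literal contains colons, so it must be bracketed
        # before a port can be appended to it unambiguously.
        return (f'[{host}]', port)
    return (host, port)


port = "6030"  # placeholder port, not taken from the lab setup
print(normalise_target(("EOS425", port)))
print(normalise_target(("169.254.255.10", port)))
print(normalise_target(("fc00:169:254:255::A", port)))
```

Neither an FQDN nor an IPv4 address contains a colon, so only the IPv6 address takes the branch that the existing tests never executed.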


PYGNMI_HOST="EOS1"

Modified state of .env file:


PYGNMI_HOST="EOS425"

PYGNMI_HOST_2="169.254.255.10"

PYGNMI_HOST_3="fc00:169:254:255::A"

So we have created 2 new variables, and we will create a new file with unit tests to utilise those variables:


#!/usr/bin/env python

# Modules
from pygnmi.client import gNMIclient
from dotenv import load_dotenv
import os


# User-defined functions (Tests)
def test_fqdn_address():
    load_dotenv()
    username_str = os.getenv("PYGNMI_USER")
    password_str = os.getenv("PYGNMI_PASS")
    hostname_str = os.getenv("PYGNMI_HOST")
    port_str = os.getenv("PYGNMI_PORT")
    path_cert_str = os.getenv("PYGNMI_CERT")

    gc = gNMIclient(target=(hostname_str, port_str), username=username_str,
                    password=password_str, path_cert=path_cert_str)
    gc.connect()
    result = gc.capabilities()
    gc.close()

    assert "supported_models" in result
    assert "supported_encodings" in result
    assert "gnmi_version" in result


def test_ipv4_address_override():
    load_dotenv()
    username_str = os.getenv("PYGNMI_USER")
    password_str = os.getenv("PYGNMI_PASS")
    hostname_str = os.getenv("PYGNMI_HOST_2")
    port_str = os.getenv("PYGNMI_PORT")
    path_cert_str = os.getenv("PYGNMI_CERT")

    gc = gNMIclient(target=(hostname_str, port_str), username=username_str,
                    password=password_str, path_cert=path_cert_str, override="EOS425")
    gc.connect()
    result = gc.capabilities()
    gc.close()

    assert "supported_models" in result
    assert "supported_encodings" in result
    assert "gnmi_version" in result


def test_ipv6_address_override():
    load_dotenv()
    username_str = os.getenv("PYGNMI_USER")
    password_str = os.getenv("PYGNMI_PASS")
    hostname_str = os.getenv("PYGNMI_HOST_3")
    port_str = os.getenv("PYGNMI_PORT")
    path_cert_str = os.getenv("PYGNMI_CERT")

    gc = gNMIclient(target=(hostname_str, port_str), username=username_str,
                    password=password_str, path_cert=path_cert_str, override="EOS425")
    gc.connect()
    result = gc.capabilities()
    gc.close()

    assert "supported_models" in result
    assert "supported_encodings" in result
    assert "gnmi_version" in result

As you see, the tests are nearly identical:

- we make connection to device over gNMI

- we do capabilities() call

- we terminate connection

- we validate if the needed information is provided in the output

In all three tests we validate the capabilities call, as it is the simplest one. What differs between those three functions is that we use:

- FQDN

- IPv4 address

- IPv6 address

to connect to devices.

Let’s erase the previous results, re-run the unit tests with pytest and coverage.py and check the results:


=================================== test session starts ====================================

platform linux -- Python 3.9.2, pytest-6.2.4, py-1.10.0, pluggy-0.13.1

rootdir: /home/aaa/Documents/D/Dev/pygnmi

collected 12 items

tests/test_address_types.py ... [ 25%]

tests/test_connect_methods.py .. [ 41%]

tests/test_functionality.py ....... [100%]

==================================== 12 passed in 9.46s ====================================

You can see that we have 3 more tests now. What about the coverage?


Name Stmts Miss Cover Missing

--------------------------------------------------------

pygnmi/__init__.py 1 0 100%

pygnmi/client.py 589 287 51% 54-55, 75-77, 81-88, 95-97, 109-111, 132-134, 139-141, 159, 161, 177-186, 225-232, 237-244, 248-249, 253-255, 260-261, 267-269, 274-276, 317-345, 353-362, 394-395, 402-408, 419-434, 445-460, 466-468, 473-475, 502, 512, 521-532, 538-547, 552, 557, 562, 566, 571-576, 585, 594, 598, 603, 617, 624, 628, 633, 639, 643, 648, 651, 658, 672, 675-682, 685-698, 704-706, 711-714, 717-720, 723-726, 766-782, 791-800, 803, 812-816, 819-828, 831, 834, 840, 852-855, 861, 869-870, 883-884, 887-891, 907, 919-920, 930-932, 942-955, 977, 981, 984, 989-1011, 1017-1025

pygnmi/path_generator.py 36 0 100%

--------------------------------------------------------

TOTAL 626 287 54%

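Line 57 has now disappeared from the Missing column, meaning that this part of the code was executed. The percentage for pygnmi/client.py still rounds to 51%, as only one more statement out of 589 is covered (Miss went from 288 to 287). Depending on what you want to cover, you keep creating new unit tests in the same way.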
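Comparing two long Missing columns by eye is error-prone, so the comparison can also be done programmatically. The sketch below uses a hypothetical helper, expand_missing, and only the first few entries of each Missing column. It assumes the plain "N" and "N-M" notation seen in this post; reports produced with branch coverage enabled use additional notation that this helper does not handle.

```python
def expand_missing(missing):
    """Turn a Missing string such as '54-55, 57' into a set of line numbers."""
    lines = set()
    for chunk in missing.split(","):
        chunk = chunk.strip()
        if not chunk:
            continue
        if "-" in chunk:
            start, end = chunk.split("-")
            lines.update(range(int(start), int(end) + 1))
        else:
            lines.add(int(chunk))
    return lines


# Beginning of the Missing column for pygnmi/client.py, before and after
# adding tests/test_address_types.py (truncated for the illustration).
before = expand_missing("54-55, 57, 75-77, 81-88")
after = expand_missing("54-55, 75-77, 81-88")

newly_covered = sorted(before - after)
print(newly_covered)
```

The set difference contains exactly the lines that the new tests started to execute.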

Step #4. Changing the Output Format

The last point we’ll tackle in this blogpost is the output format. The one you have seen above is good for humans, but it is not necessarily suitable if you want to build automated pipelines. In the latter case, JSON output is preferable. Coverage.py can give you that output as well, with the coverage json command.

This command generates the file called coverage.json. This file contains more detailed representation of the unit test report:


{

"meta": {

"version": "5.5",

"timestamp": "2021-09-03T20:52:34.302178",

"branch_coverage": false,

"show_contexts": false

},

"files": {

"pygnmi/__init__.py": {

"executed_lines": [3],

"summary": {

"covered_lines": 1,

"num_statements": 1,

"percent_covered": 100.0,

"missing_lines": 0,

"excluded_lines": 0

},

"missing_lines": [],

"excluded_lines": []

},

"pygnmi/client.py": {

"executed_lines": [4, 5, 6, 9, 10, 11, 12, 13, 14, 15, 18, 19, 20, 23, 27, 28, 31, 32, 35, 42, 43, 44, 45, 46, 47, 48, 49, 50, 52, 53, 56, 57, 59, 62, 66, 69, 74, 80, 90, 91, 92, 93, 100, 101, 102, 103, 104, 105, 106, 107, 113, 115, 116, 118, 121, 126, 128, 129, 131, 136, 138, 143, 144, 146, 147, 149, 150, 152, 153, 155, 156, 157, 158, 160, 162, 163, 165, 167, 169, 170, 172, 174, 175, 189, 216, 218, 219, 220, 222, 223, 224, 234, 235, 236, 246, 247, 251, 257, 258, 259, 263, 264, 266, 271, 273, 278, 279, 281, 282, 284, 285, 287, 289, 290, 292, 293, 295, 296, 297, 298, 299, 300, 302, 303, 304, 306, 308, 311, 313, 314, 315, 347, 349, 351, 365, 388, 389, 390, 391, 393, 397, 398, 399, 400, 410, 411, 412, 413, 414, 415, 417, 418, 436, 437, 438, 439, 440, 441, 443, 444, 462, 463, 465, 470, 472, 477, 478, 480, 481, 483, 484, 486, 487, 488, 489, 490, 491, 493, 494, 495, 497, 499, 504, 505, 506, 507, 508, 509, 510, 514, 516, 518, 534, 549, 551, 553, 556, 559, 560, 565, 568, 569, 570, 579, 580, 582, 583, 588, 589, 591, 592, 597, 600, 601, 606, 607, 613, 614, 616, 620, 621, 623, 627, 630, 632, 634, 637, 638, 641, 645, 646, 650, 653, 655, 656, 660, 663, 665, 669, 671, 674, 684, 700, 701, 703, 708, 710, 716, 722, 728, 734, 736, 737, 740, 741, 743, 744, 752, 784, 802, 805, 818, 830, 833, 836, 842, 846, 857, 863, 872, 873, 882, 886, 893, 894, 906, 909, 910, 918, 924, 929, 934, 935, 936, 937, 939, 941, 957, 958, 960, 961, 962, 963, 964, 965, 967, 969, 970, 972, 974, 979, 980, 983, 986, 987, 1013, 1015],

"summary": {

"covered_lines": 302,

"num_statements": 589,

"percent_covered": 51.27334465195246,

"missing_lines": 287,

"excluded_lines": 0

},

"missing_lines": [54, 55, 75, 76, 77, 81, 82, 83, 84, 85, 86, 87, 88, 95, 96, 97, 109, 110, 111, 132, 133, 134, 139, 140, 141, 159, 161, 177, 178, 179, 181, 183, 184, 186, 225, 226, 227, 228, 229, 230, 232, 237, 238, 239, 240, 241, 242, 244, 248, 249, 253, 254, 255, 260, 261, 267, 268, 269, 274, 275, 276, 317, 318, 320, 321, 323, 324, 326, 327, 329, 330, 332, 333, 335, 336, 338, 339, 341, 342, 344, 345, 353, 354, 355, 357, 359, 360, 362, 394, 395, 402, 403, 404, 407, 408, 419, 420, 421, 422, 423, 424, 425, 426, 429, 430, 433, 434, 445, 446, 447, 448, 449, 450, 451, 452, 455, 456, 459, 460, 466, 467, 468, 473, 474, 475, 502, 512, 521, 522, 524, 525, 526, 528, 530, 531, 532, 538, 539, 540, 541, 543, 544, 545, 546, 547, 552, 557, 562, 566, 571, 572, 573, 574, 576, 585, 594, 598, 603, 617, 624, 628, 633, 639, 643, 648, 651, 658, 672, 675, 676, 677, 679, 682, 685, 686, 687, 688, 689, 690, 693, 695, 698, 704, 705, 706, 711, 712, 713, 714, 717, 718, 719, 720, 723, 724, 725, 726, 766, 771, 774, 775, 776, 777, 778, 779, 781, 782, 791, 792, 794, 795, 796, 797, 798, 800, 803, 812, 813, 814, 815, 816, 819, 820, 821, 822, 823, 824, 826, 827, 828, 831, 834, 840, 852, 853, 854, 855, 861, 869, 870, 883, 884, 887, 888, 889, 891, 907, 919, 920, 930, 931, 932, 942, 943, 944, 945, 946, 948, 950, 951, 953, 955, 977, 981, 984, 989, 990, 992, 993, 995, 996, 998, 999, 1001, 1002, 1004, 1005, 1007, 1008, 1010, 1011, 1017, 1018, 1020, 1022, 1023, 1025],

"excluded_lines": []

},

"pygnmi/path_generator.py": {

"executed_lines": [5, 6, 9, 10, 11, 12, 13, 16, 17, 18, 20, 21, 22, 23, 24, 25, 27, 28, 30, 32, 33, 35, 36, 37, 38, 40, 41, 43, 44, 45, 46, 48, 49, 51, 54, 56],

"summary": {

"covered_lines": 36,

"num_statements": 36,

"percent_covered": 100.0,

"missing_lines": 0,

"excluded_lines": 0

},

"missing_lines": [],

"excluded_lines": []

}

},

"totals": {

"covered_lines": 339,

"num_statements": 626,

"percent_covered": 54.153354632587856,

"missing_lines": 287,

"excluded_lines": 0

}

}

As this is a JSON file, it is relatively easy to import it into Python, Ansible or any other tool.
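As a minimal sketch of such an import in Python, the snippet below reads the report structure and lists the files whose coverage is below a chosen threshold. To stay self-contained it parses an inline sample that keeps only the summary fields from the report above; in a real pipeline you would load coverage.json from disk instead. The helper files_below and the 80% threshold are our own choices for the illustration.

```python
import json

# Trimmed copy of the report above: only the summary fields are kept.
SAMPLE_REPORT = """
{
  "files": {
    "pygnmi/__init__.py": {
      "summary": {"num_statements": 1, "missing_lines": 0, "percent_covered": 100.0}
    },
    "pygnmi/client.py": {
      "summary": {"num_statements": 589, "missing_lines": 287, "percent_covered": 51.27334465195246}
    },
    "pygnmi/path_generator.py": {
      "summary": {"num_statements": 36, "missing_lines": 0, "percent_covered": 100.0}
    }
  },
  "totals": {"num_statements": 626, "missing_lines": 287, "percent_covered": 54.153354632587856}
}
"""


def files_below(report, threshold):
    """Return the names of files whose percent_covered is under the threshold."""
    return [
        name
        for name, data in report["files"].items()
        if data["summary"]["percent_covered"] < threshold
    ]


# In a pipeline: with open("coverage.json") as f: report = json.load(f)
report = json.loads(SAMPLE_REPORT)

print(files_below(report, 80.0))
print(round(report["totals"]["percent_covered"], 1))
```

A check like this can then decide whether a pipeline stage passes or fails, depending on the threshold you consider appropriate for your project.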

Refer to the documentation for further output formats.

If You Prefer Video

You can watch demos from pygnmi articles in our YouTube channel. However, this specific blogpost doesn’t have video.

Examples in GitHub

You can find this and other examples in our GitHub repository.

Lessons Learned

The biggest lesson learned from this blogpost is that coverage is a helpful metric, but only a metric. What does that mean? It means that with the help of coverage.py you can see which lines of code your unit tests have executed and which they have not. You may achieve 100% code coverage, but it is up to you to decide whether that is what you are after. Probably 50-60% is enough, provided you have tested the most critical components. Don’t be driven by metrics. Drive the metrics yourself.

Conclusion

Software development is an interesting yet complicated topic with tons of different aspects. We cover many of them at our Network Automation Training, and you can benefit from that education. Coverage.py and pytest together are very useful helpers to make sure your software is rock solid and fit for use in production systems. Take care and good bye.

Support us

P.S.

If you have further questions or you need help with your networks, we are happy to assist you, just send us a message. Also don’t forget to share the article on your social media, if you like it.

BR,

Anton Karneliuk