Hello my friend,

yes, we are nerds. Despite the Christmas holidays, we continue working and delivering value. After all, Guido van Rossum created Python over the Christmas holidays. To be honest, we find that very inspiring and, therefore, decided to look into something appealing as well.

[...] retrieval system, or transmitted in any form or by any means, electronic, mechanical or photocopying, recording, or otherwise, for commercial purposes without the prior permission of the author.

How to automate the automation?

Is “automating the automation” even the right term? Yes, it is. It ultimately means being able to invoke automation workflows not only manually by the automation operator, but also in an automated way: via an API request (e.g., called from a customer self-service portal or a webhook from some other application) or triggered by a certain event or condition (e.g., based on syslog, SNMP or streaming telemetry). For the various types of applications (Ansible or Python) there are different automation platforms (AWX, Apache Airflow, StackStorm), which ultimately fulfil that task.

And in our trainings you will get exposure to some of them, to a degree that you will be able to install them from scratch and start using them for your own purposes.

We offer the following training programs:

- Zero-to-Hero Network Automation Training

- Automation with Nornir (2nd step after advanced network automation)

- Automation Orchestration with AWX

During these trainings you will learn the following topics:

- Success and failure strategies to build the automation tools.

- Principles of software development and the tools for it.

- Data encoding (free-text, XML, JSON, YAML, Protobuf)

- Model-driven network automation with YANG, NETCONF, RESTCONF, gNMI.

- Full configuration templating with Jinja2 based on the source of truth (NetBox).

- Best programming languages (Python, Bash) for developing automation, configuration management tools (Ansible) and automation frameworks (Nornir).

- Network automation infrastructure (Linux, Linux networking, KVM, Docker).

- Orchestration of automation workflows with AWX and its integration with NetBox, GitHub, as well as custom execution environments for better scalability.

All programming concepts are very well grounded and explained in a straightforward way, which facilitates understanding

Andre Menzes @ Nokia

Moreover, we put all the mentioned technologies in the context of real use cases, which our team has solved and is solving in various projects in service provider, enterprise and data centre networks and systems across Europe and the USA. That gives you the opportunity to ask questions to understand the solutions in depth and to discuss your own projects. On top of that, each technology comes with online demos, and you do the labs afterwards to master your skills. Such a mixture creates a unique learning environment, which all students value so much. Join us and unleash your potential.

Brief Description

At the end of summer 2021, NVIDIA Networking (in this case, the former Cumulus) released a new version of Cumulus Linux, 4.4, which introduced model-driven automation for the first time. Quite frankly, we had been waiting a long time for this to happen: I remember talking to Cumulus engineers about model-driven automation from the perspective of NETCONF/YANG (with OpenConfig YANG modules) back in 2018. At that time we didn’t have time to test it and found an excuse for ourselves: NVIDIA themselves didn’t recommend bringing it to production.

In mid-December 2021, NVIDIA released a new generation of Cumulus Linux, 5.0, where this feature is now considered production-ready, so we simply cannot pass it by.

So, the feature is called NVUE: NVIDIA User Experience. We are not too sure why they decided to call it this way, as the name doesn’t give much clarity about what experience the user will get. Let’s take a look at the official description:

[...] complete Cumulus Linux system (hardware and software) providing a robust API that allows for multiple interfaces to both view (show) and configure (set and unset) any element within a system running the NVUE software. The NVUE command line interface (CLI) and the REST API leverage the same API to interface with Cumulus Linux.

That definition gives us more clarity, so in a nutshell, NVUE is:

- An API schema.

- A REST API, which relies on that schema and makes it possible to manage Cumulus Linux.

- A CLI, which operates on top of the same schema as the REST API.

Interestingly enough, nowhere in the NVUE documentation could we find the word “YANG”, which is the standard for specifying data models in network automation. However, we found this note in another official NVIDIA blog:

This API-driven infrastructure defines an object model for every element in the NOS, and exposes a brand new CLI and a REST API. This new infrastructure enables adding more clients, and with all the API clients having the interface to the same models, it is our first step towards the support of standard YANG models and other programmable APIs in the future.

That gives us hope that YANG modules will be implemented in the future. However, the fact that YANG modules are not implemented at this stage is not that bad. We finally have a data model in the form of API schemas, which is implemented consistently across the CLI and the API…

… If you have been reading our blog for a while, this may sound like déjà vu to you. This is exactly the same approach Nokia took back in 2018 by introducing the model-driven CLI (MD-CLI). Since that time, there have been two CLIs in Nokia SR OS: the classic CLI with the pre-SR OS 16.0 syntax, which is not based on any YANG modules, and MD-CLI, which has a new syntax based on new YANG modules.

This analogy is important, because in Cumulus Linux we now also have two CLIs:

- the one we used previously, where commands start with the net keyword.

- the new one, where commands start with the nv keyword.

It is recommended not to mix these two together.

What’s in the backend?

Apparently, nothing has changed here. NVUE manages the same text files (e.g., /etc/network/interfaces, /etc/frr/frr.conf) and interacts with the same daemons (e.g., networking.service and frr.service) as the original net CLI does.

Talk is cheap, show the code. Let’s take a look at how to get started with model-driven automation in Cumulus Linux.

Lab Setup

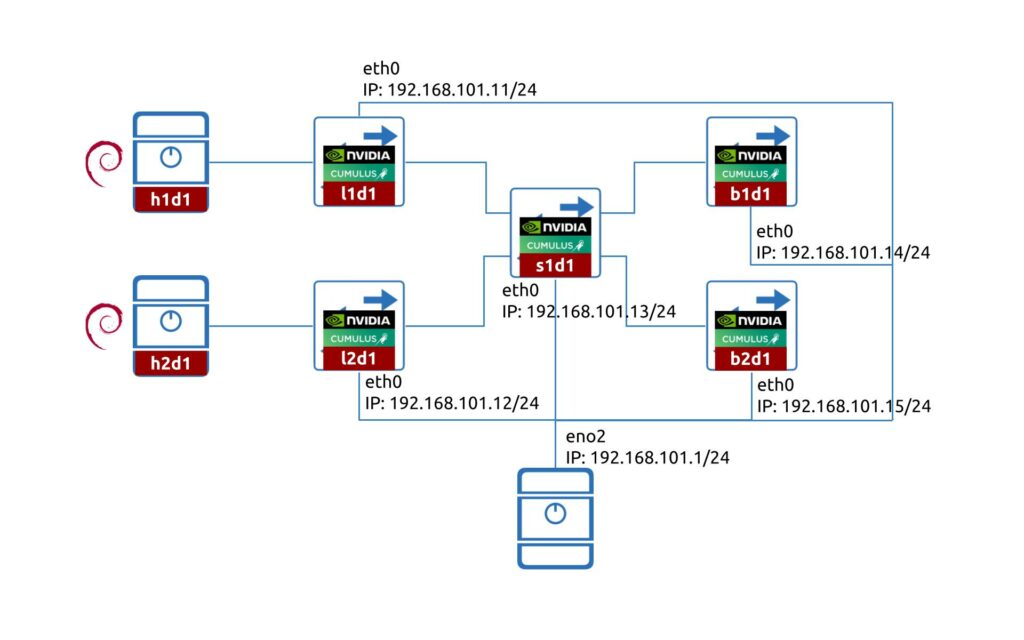

As we plan to automate network running NVIDIA Cumulus 5.0 with NVUE entirely, we built quite a big pod:

We don’t need though all the devices for the purpose of this blogpost and one Cumulus VX 5.0 would be enough.

The lab consist of five switches:

- 2x leafs (l1d1 and l2d1)

- 1x spine (s1d1)

- 2x border leafs (b1d1 and b2d1)

- 2x hosts (h1d1 and h2d1) running Debian Linux 11.1

Scenario Description

In today’s blogpost we’ll scratch the surface of model-driven automation in NVIDIA Cumulus. Namely, we’ll show:

- How to enable NVUE in Cumulus Linux?

- How to collect the information via REST API?

Although we’ll show how to perform a simple configuration with NV, it is not the focus of this blogpost; we’ll explain that in a separate blogpost.

Step #1. Configure Management Interface with NV

First of all, we need to make sure we are able to connect remotely to the Cumulus Linux device. We’ll use l1d1 in our example. As we are going to explore NVUE from all sides, we’ll use the nv CLI for that.

Originally, Cumulus Linux (using net) had a two-stage configuration process:

- You prepare a configuration by sending the network device a number of commands starting with net.

- You apply the prepared configuration using net commit.

This approach is quite useful, as it allows you to review the configuration and possibly to find typos or even more significant mistakes before changing the operational state of the device.
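To make the two-stage idea more tangible, here is a toy Python model of it. It is only an illustration of the concept (prepare a candidate, review the difference, then commit); the class and function names are hypothetical and it says nothing about how Cumulus Linux implements this internally.

```python
import copy


def flatten(tree, prefix=()):
    """Turn a nested dict into {path_tuple: leaf_value} for easy comparison."""
    flat = {}
    for key, value in tree.items():
        path = prefix + (key,)
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


class TwoStageConfig:
    """Toy model: changes go to a candidate first and reach 'running' only on commit."""

    def __init__(self, running=None):
        self.running = running or {}
        self.candidate = copy.deepcopy(self.running)

    def set(self, path, value):
        """Stage a value in the candidate configuration (nothing is applied yet)."""
        node = self.candidate
        *parents, leaf = path
        for key in parents:
            node = node.setdefault(key, {})
        node[leaf] = value

    def diff(self):
        """Return staged leaves that differ from the running configuration.

        Deletions are ignored to keep the sketch short.
        """
        before, after = flatten(self.running), flatten(self.candidate)
        return {path: value for path, value in after.items() if before.get(path) != value}

    def commit(self):
        """Apply the candidate: it becomes the new running configuration."""
        self.running = copy.deepcopy(self.candidate)


config = TwoStageConfig()
config.set(("system", "hostname"), "l1d1")
config.set(("interface", "eth0", "ip", "gateway"), "192.168.101.1")

pending = config.diff()                        # review before applying
running_before = copy.deepcopy(config.running)  # still empty at this point
config.commit()                                # now the change takes effect
```

The point of the separation is visible in `running_before`: until `commit()` is called, the operational state is untouched, so a typo can still be caught in the diff.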

The same logic is replicated in the nv CLI as well. Assuming you have a brand-new installation of the Cumulus Linux device:

cumulus@cumulus:mgmt:~$ nv set interface eth0 ip address 192.168.101.11/24

cumulus@cumulus:mgmt:~$ nv set interface eth0 ip gateway 192.168.101.1

If you are familiar with the original Cumulus CLI, the configuration may look similar: in a single line you provide all the details to configure, with the difference that in the nv CLI (NVUE) you use set instead of add in the net CLI.

The configuration is not yet applied and you may verify it before applying it using nv config diff:

- set:
    system:
      hostname: l1d1
    interface:
      eth0:
        ip:
          address:
            192.168.101.11/24: {}
          gateway:
            192.168.101.1: {}
        type: eth

As you see, the configuration is presented as a YAML document. What is interesting, though, is that some values are implemented as keys themselves (e.g., 192.168.101.11/24 is a key).

We’ll evaluate the NVIDIA Cumulus data model in a separate blogpost.
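To illustrate what “values as keys” means for a script consuming this output, here is a small Python sketch. It assumes the nesting shown in the dictionary below, which is our reading of the nv config diff output above; the helper name `interface_addresses` is hypothetical.

```python
# The candidate configuration from the diff output, written as the Python
# structure a YAML parser would be expected to produce (assumed nesting).
diff = [
    {
        "set": {
            "system": {"hostname": "l1d1"},
            "interface": {
                "eth0": {
                    "ip": {
                        "address": {"192.168.101.11/24": {}},
                        "gateway": {"192.168.101.1": {}},
                    },
                    "type": "eth",
                }
            },
        }
    }
]


def interface_addresses(diff_doc, name):
    """Collect the IP addresses staged for an interface.

    The addresses are dictionary keys (each with an empty dict as value),
    so we read the keys rather than the values.
    """
    addresses = []
    for operation in diff_doc:
        ip = operation.get("set", {}).get("interface", {}).get(name, {}).get("ip", {})
        addresses.extend(ip.get("address", {}).keys())
    return addresses


print(interface_addresses(diff, "eth0"))  # ['192.168.101.11/24']
```

A side effect of this design is that each address is naturally unique within an interface, and per-address attributes could be attached under it later without changing the shape of the data.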

Once you are happy with the configuration, you can commit it with nv config apply.

It will take a few moments for the configuration to be applied:

/etc/cumulus/ports.conf has been manually changed since the last save. These changes WILL be overwritten.

/etc/dhcp/dhclient-exit-hooks.d/dhcp-sethostname has been manually changed since the last save. These changes WILL be overwritten.

/etc/hostname has been manually changed since the last save. These changes WILL be overwritten.

/etc/ntp.conf has been manually changed since the last save. These changes WILL be overwritten.

/etc/ptp4l.conf has been manually changed since the last save. These changes WILL be overwritten.

/etc/network/interfaces has been manually changed since the last save. These changes WILL be overwritten.

/etc/frr/frr.conf has been manually changed since the last save. These changes WILL be overwritten.

/etc/frr/daemons has been manually changed since the last save. These changes WILL be overwritten.

The frr service will need to be restarted because the list of router services has changed. This will disrupt traffic.

Warning: current hostname `cumulus` will be replaced with `k8f-l1d1`

/etc/hosts has been manually changed since the last save. These changes WILL be overwritten.

Are you sure? [y/N] y

Broadcast message from root@k8f-l1d1 (somewhere) (Mon Dec 27 19:23:33 2021):

ZTP: Switch has already been configured. /etc/network/interfaces was modified

Broadcast message from root@k8f-l1d1 (somewhere) (Mon Dec 27 19:23:33 2021):

ZTP: ZTP will not run

applied

From our jump host, let’s see if we can reach the Cumulus VX:

The authenticity of host '192.168.101.11 (192.168.101.11)' can't be established.

ECDSA key fingerprint is SHA256:R+1QS/cHA9eba3BZB74ELNg1cu6RecCmYldQatjJxao.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.101.11' (ECDSA) to the list of known hosts.

cumulus@192.168.101.11's password:

Linux k8f-l1d1 4.19.0-cl-1-amd64 #1 SMP Debian 4.19.205-1+cl5.0.0u1 (2021-12-04) x86_64

Welcome to NVIDIA Cumulus VX (TM)

NVIDIA Cumulus VX (TM) is a community supported virtual appliance designed

for experiencing, testing and prototyping NVIDIA Cumulus' latest technology.

For any questions or technical support, visit our community site at:

https://www.nvidia.com/en-us/support

The registered trademark Linux (R) is used pursuant to a sublicense from LMI,

the exclusive licensee of Linus Torvalds, owner of the mark on a world-wide

basis.

Last login: Mon Dec 27 19:20:46 2021

cumulus@l1d1:mgmt:~$

Connectivity is established, and now we can focus on the REST API aspect of NVUE.

Step #2. Enable NVUE REST API Service

The REST API service in NVIDIA Cumulus is implemented with the help of NGINX. As such, we need to modify its configuration using the following three commands:

$ sudo sed -i 's/listen localhost:8765 ssl;/listen 0.0.0.0:8765 ssl;/g' /etc/nginx/sites-available/nvue.conf

$ sudo systemctl restart nginx.service

Those three lines do the following:

- The first line creates a symlink between two files.

- The second line modifies the NGINX configuration so that the REST API listens on port 8765 not only on localhost, but also on addresses reachable remotely. You can restrict it further by whitelisting only your management IP.

- The third line restarts the NGINX server with the new configuration file.
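If you prefer to see what the substitution does without touching the switch, the same text replacement can be sketched in Python. The sample configuration fragment below is hypothetical (we only know the listen directive from the sed command above), and binding to the management address is just one possible way to restrict access, not something taken from the NVIDIA documentation.

```python
def expose_listener(config_text, bind_address="0.0.0.0", port=8765):
    """Replace the localhost-only listen directive with the given bind address.

    Mirrors the sed substitution: only the exact directive is rewritten,
    everything else in the file is left untouched.
    """
    old = f"listen localhost:{port} ssl;"
    new = f"listen {bind_address}:{port} ssl;"
    return config_text.replace(old, new)


# Hypothetical fragment, for illustration only.
sample = "server {\n    listen localhost:8765 ssl;\n}\n"

# Listen on all IPv4 addresses, as in the sed command.
print(expose_listener(sample))

# Assumption: listen only on the management address of the switch instead.
print(expose_listener(sample, bind_address="192.168.101.11"))
```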

Once this configuration is performed, the REST API is enabled and you can start working with it.

Step #3. Collect Information via REST API from NVIDIA Cumulus

The last step is to connect remotely to the network device running NVIDIA Cumulus Linux 5.0. To do that, we use the curl tool. Here is a simple example:

{
  "build": "Cumulus Linux 5.0.0",
  "hostname": "l1d1",
  "timezone": "Etc/UTC",
  "uptime": 69876
}

We provide the following arguments to curl:

- -X GET sets the HTTP method.

- -u “cumulus:CumulusLinux!” generates the Authorization header.

- -k skips the SSL certificate validation.

- The URL of the CRUD endpoint.

The CRUD endpoints can be found in the official REST API documentation for Cumulus Linux. It is worth mentioning that it is possible not only to collect information, but also to perform configuration of the network device.

Configuration will be covered in a separate blogpost.
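For those who would rather script this than type it, here is a rough Python equivalent of the curl call, using only the standard library. It is a sketch under assumptions: the host, port and the /nvue_v1/system path are taken from the verbose curl output in the Lessons Learned section of this post, and the function names are ours.

```python
import base64
import json
import ssl
import urllib.request


def build_request(host, username, password, path="/nvue_v1/system", port=8765):
    """Prepare a GET request with a Basic Authorization header (like curl -X GET -u)."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"https://{host}:{port}{path}",
        method="GET",
        headers={"Authorization": f"Basic {token}"},
    )


def fetch(request, timeout=10):
    """Send the request and parse the JSON body.

    Certificate validation is disabled, like curl -k. That is acceptable in a
    lab with self-signed certificates, but not something to copy to production.
    """
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_request("192.168.101.11", "cumulus", "CumulusLinux!")
print(request.get_method(), request.full_url)

# With a reachable switch you would then call:
# system_info = fetch(request)
# print(system_info["hostname"])
```

Building the request is kept separate from sending it, so you can inspect the URL and headers before anything goes on the wire.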

The -u key is a shortcut, which generates the Authorization header using base64 encoding. You can prepare it yourself:

- Using this webpage, convert username:password into base64 encoding.

- Create a header “Authorization”: “Basic XXX”, where XXX is the value generated in the previous step.

This is how you can modify the curl call:

{
  "build": "Cumulus Linux 5.0.0",
  "hostname": "l1d1",
  "timezone": "Etc/UTC",
  "uptime": 71918
}

It is also possible to perform the base64 encoding with Ansible or Python.
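In Python, for instance, the standard base64 module is enough. The snippet below is a minimal sketch with a helper name of our own choosing; it uses the lab credentials from the curl example.

```python
import base64


def basic_auth_header(username, password):
    """Build the HTTP Basic Authorization header for the given credentials."""
    raw = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(raw).decode("ascii")
    return {"Authorization": f"Basic {token}"}


header = basic_auth_header("cumulus", "CumulusLinux!")
print(header)  # {'Authorization': 'Basic Y3VtdWx1czpDdW11bHVzTGludXgh'}
```

The resulting value is the same one curl generates itself when the -u key is used.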

Lessons Learned

Whilst preparing this blogpost, we were looking for details on what the -u key does with the header. What helped us a lot was the verbose output of curl using the -vvv flag:

!

! SOME OUTPUT IS TRUNCATED FOR BREVITY

!

* Server auth using Basic with user 'cumulus'

> GET /nvue_v1/system HTTP/1.1

> Host: 192.168.101.11:8765

> Authorization: Basic Y3VtdWx1czpDdW11bHVzTGludXgh

> User-Agent: curl/7.74.0

> Accept: */*

!

! FURTHER OUTPUT IS TRUNCATED FOR BREVITY

You can see the auto-generated Authorization header in the output above, which shows the authentication scheme (Basic) and the associated value.
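The value in that header is only encoded, not encrypted, so it can be turned back into the credentials trivially. A short Python sketch (the helper name is ours) shows this with the header captured above:

```python
import base64


def decode_basic_auth(header_value):
    """Split a 'Basic <token>' header value back into (username, password)."""
    scheme, _, token = header_value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError(f"Unsupported authentication scheme: {scheme}")
    decoded = base64.b64decode(token).decode("utf-8")
    # Only the first colon separates the user from the password.
    username, _, password = decoded.partition(":")
    return username, password


user, password = decode_basic_auth("Basic Y3VtdWx1czpDdW11bHVzTGludXgh")
print(user)  # cumulus
```

This is a good reminder of why the REST API is served over SSL: anyone who can read the header can read the password.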

Build your own and your network’s stable future with our Network Automation Training.

Conclusion

Having an API and a data schema for configuring network devices running NVIDIA Cumulus and collecting their operational state is a very good step towards proper model-driven automation. Finally, these capabilities are available in Cumulus as well, which should boost its automation across the world. Take care and good bye.

P.S.

If you have further questions or you need help with your networks, we are happy to assist you; just send us a message. Also, don’t forget to share the article on your social media if you like it.

BR,

Anton Karneliuk